Best practices for gaming sites to vet user-submitted game mods for safety & quality?

The Critical Need for Robust Mod Vetting

User-submitted game mods are a cornerstone of many vibrant gaming communities, extending game longevity and giving players room for creativity. However, the open nature of user-generated content also introduces significant risks, including malware, stability issues, inappropriate content, and poor-quality experiences. For gaming sites hosting these mods, a stringent vetting process is not just good practice; it’s essential for protecting users, maintaining platform integrity, and fostering a healthy ecosystem.

Effective mod vetting strikes a balance between encouraging creativity and ensuring a safe, enjoyable experience for all players. This requires a comprehensive strategy that evolves with technology and community needs.

Establishing Clear Guidelines and Policies

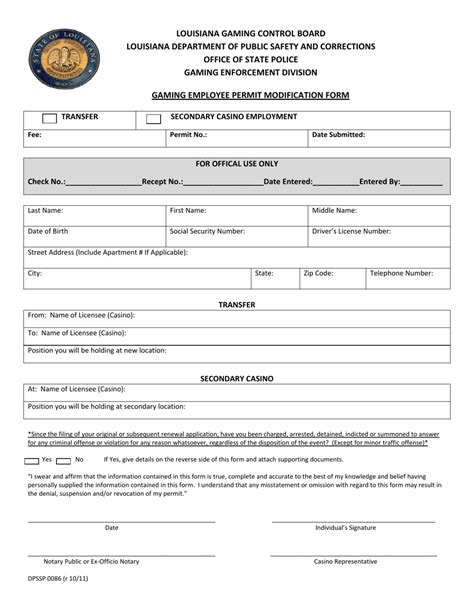

The foundation of any successful mod vetting system is a well-defined set of guidelines and policies. These documents must be easily accessible, unambiguous, and cover all aspects of mod submission, from technical requirements to content restrictions. They should clearly state what constitutes acceptable behavior and content, outline prohibited items (e.g., copyrighted material, exploits, malicious code, hate speech), and detail the consequences for non-compliance.

These guidelines not only inform mod creators but also empower the community to understand and report violations effectively. Regular updates to these policies are crucial to adapt to new trends, technologies, and potential threats.

Implementing a Multi-layered Review Process

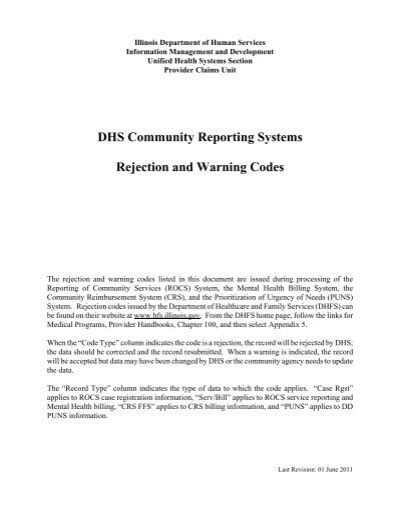

Automated Security Scans

The first line of defense for any user-submitted content should be automated scanning. This means running every upload through antivirus engines and signature databases to detect known malware, viruses, trojans, and other malicious code within mod files. Automated systems can also flag suspicious file types, unusual file structures, and scripts that may carry exploits. AI and machine learning can supplement signature-based scans by flagging unfamiliar threats and patterns associated with harmful content at scale, although such detections are probabilistic and still benefit from human confirmation.

Automated checks are critical for initial filtering, reducing the workload on human moderators and catching overt threats before they reach the community.
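
As a rough illustration of this first filter, here is a minimal Python sketch that inspects a zipped mod upload. It assumes mods arrive as zip archives; the `BLOCKED_EXTENSIONS` list, the `KNOWN_BAD_SHA256` set, and the `scan_mod_archive` function are hypothetical names invented for this example, not part of any particular platform or scanner. A real pipeline would add a proper antivirus engine and size limits on top of checks like these.

```python
import hashlib
import io
import zipfile
from pathlib import PurePosixPath

# Hypothetical policy values; a real site would load these from configuration.
BLOCKED_EXTENSIONS = {".exe", ".dll", ".bat", ".ps1", ".scr"}
KNOWN_BAD_SHA256 = set()  # assumed to be filled from a malware signature feed


def scan_mod_archive(data: bytes) -> list[str]:
    """Return human-readable findings for a zipped mod upload (empty if clean)."""
    findings = []
    try:
        archive = zipfile.ZipFile(io.BytesIO(data))
    except zipfile.BadZipFile:
        return ["not a valid zip archive"]
    with archive:
        for info in archive.infolist():
            if info.is_dir():
                continue
            path = PurePosixPath(info.filename)
            # Entries that point outside the mod folder are an unusual structure.
            if path.is_absolute() or ".." in path.parts:
                findings.append(f"{info.filename}: path escapes the mod folder")
            # Suspicious file types, judged by extension only in this sketch.
            if path.suffix.lower() in BLOCKED_EXTENSIONS:
                findings.append(f"{info.filename}: blocked file type")
            # Exact-match lookup against known-bad file hashes.
            digest = hashlib.sha256(archive.read(info)).hexdigest()
            if digest in KNOWN_BAD_SHA256:
                findings.append(f"{info.filename}: matches known-bad hash")
    return findings
```

An empty result here only means that none of these simple checks fired; it does not show the mod is safe, which is why the later review layers still matter.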

Manual Moderation and Quality Assurance

While automation is powerful, human oversight remains indispensable. Manual review teams are vital for assessing subjective quality, functionality, and adherence to nuanced content guidelines that automated systems might miss. This includes testing mods for stability, performance impact, compatibility with the base game, and overall user experience. Moderators also verify that the mod’s description, images, and other metadata accurately represent its content.

Manual review is also crucial for identifying inappropriate visual or textual content, ensuring lore-friendliness (if applicable), and confirming that mods don’t introduce unfair advantages or break game balance in unintended ways. A structured review checklist can help ensure consistency across all manual evaluations.
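
One way to picture such a checklist is as a small data structure that every reviewer fills in the same way. The sketch below is illustrative only: the item names in `CHECKLIST_ITEMS` are assumptions paraphrased from the criteria above, and the `ManualReview` class is a hypothetical helper rather than an existing tool.

```python
from dataclasses import dataclass, field

# Hypothetical checklist items, paraphrased from the review criteria above.
CHECKLIST_ITEMS = (
    "loads_without_crash",
    "acceptable_performance_impact",
    "compatible_with_base_game",
    "metadata_matches_content",
    "content_within_guidelines",
    "no_unfair_advantage",
)


@dataclass
class ManualReview:
    mod_id: str
    reviewer: str
    results: dict[str, bool] = field(default_factory=dict)
    notes: dict[str, str] = field(default_factory=dict)

    def record(self, item: str, passed: bool, note: str = "") -> None:
        """Store the outcome of one checklist item, with an optional note."""
        if item not in CHECKLIST_ITEMS:
            raise ValueError(f"unknown checklist item: {item}")
        self.results[item] = passed
        if note:
            self.notes[item] = note

    def outstanding(self) -> list[str]:
        """Checklist items the reviewer has not evaluated yet."""
        return [item for item in CHECKLIST_ITEMS if item not in self.results]

    def decision(self) -> str:
        """'incomplete' until every item is recorded, then approve or reject."""
        if self.outstanding():
            return "incomplete"
        return "approve" if all(self.results.values()) else "reject"
```

Refusing to produce a decision until every item has been recorded is the design choice that enforces consistency: two reviewers may disagree on an outcome, but neither can skip a step.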

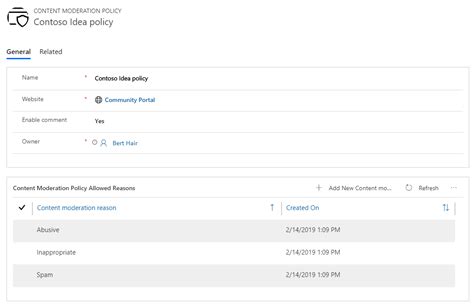

Empowering the Community in the Vetting Process

A proactive community can be an invaluable asset in mod vetting. Gaming sites should provide robust and easy-to-use reporting tools that allow users to flag mods they believe violate guidelines, contain bugs, or pose security risks. An effective reporting system includes clear categories for issues and provides users with a mechanism to describe their concerns in detail.
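
To make the idea of categorized reports concrete, the following sketch models a report and a simple triage order. The three categories mirror the reasons listed above; the `ModReport` and `triage` names, and the rule of putting security reports first and then sorting by the number of distinct reporters, are assumptions made for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class ReportCategory(Enum):
    SECURITY = "security"
    GUIDELINE_VIOLATION = "guideline_violation"
    BUG = "bug"


@dataclass(frozen=True)
class ModReport:
    mod_id: str
    reporter_id: str
    category: ReportCategory
    details: str  # free-text description supplied by the user


def triage(reports: list[ModReport]) -> list[str]:
    """Order reported mods for moderator attention.

    Mods with any security report come first; within each group, mods
    reported by more distinct users rank higher.
    """
    reporters: dict[str, set[str]] = {}
    security: set[str] = set()
    for report in reports:
        reporters.setdefault(report.mod_id, set()).add(report.reporter_id)
        if report.category is ReportCategory.SECURITY:
            security.add(report.mod_id)
    return sorted(
        reporters,
        key=lambda mod_id: (mod_id not in security, -len(reporters[mod_id]), mod_id),
    )
```

Counting distinct reporters rather than raw report volume is a small guard against one user flooding the queue with repeated reports about the same mod.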

Furthermore, implementing user rating and review systems can highlight popular and high-quality mods while naturally pushing problematic ones to the background. Some platforms also benefit from a ‘Trusted Modder’ program, where established, reputable creators gain a level of pre-vetting or faster review for their submissions, based on a proven track record of safety and quality.
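
How ratings are aggregated affects whether they really surface good mods: a mod with two positive votes should not outrank one with hundreds of mostly positive votes. One common approach, shown here as an assumption rather than something the practices above prescribe, is to rank by the lower bound of the Wilson score interval. The sketch assumes simple up/down votes; `wilson_lower_bound` is a name chosen for this example.

```python
import math


def wilson_lower_bound(upvotes: int, downvotes: int, z: float = 1.96) -> float:
    """Lower bound of the Wilson score interval for the share of positive votes.

    z = 1.96 corresponds to roughly 95% confidence. Mods with few votes
    get a conservative score until more ratings arrive.
    """
    n = upvotes + downvotes
    if n == 0:
        return 0.0
    p = upvotes / n
    z2 = z * z
    centre = p + z2 / (2 * n)
    margin = z * math.sqrt((p * (1 - p) + z2 / (4 * n)) / n)
    # Clamp at zero to absorb tiny negative values from floating-point rounding.
    return max(0.0, (centre - margin) / (1 + z2 / n))
```

Under this scoring, a mod with 90 positive and 10 negative votes ranks above a mod with only 2 positive votes, even though the latter has a perfect raw average.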

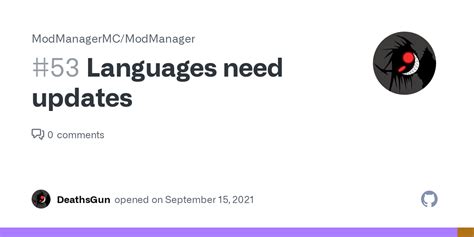

Version Control and Ongoing Monitoring

Mods are rarely static; they evolve with updates, bug fixes, and new features. A robust vetting process must account for this by requiring re-vetting for new versions of existing mods. Implementing a comprehensive version control system allows sites to track changes, compare different iterations, and ensure that an update has neither reintroduced previously fixed issues nor added new threats.
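
Comparing iterations can start with something as simple as a per-file hash manifest, so reviewers see exactly which files an update touched. The sketch below assumes each version is available as a mapping from file path to file contents; `build_manifest` and `diff_manifests` are hypothetical helper names.

```python
import hashlib


def build_manifest(files: dict[str, bytes]) -> dict[str, str]:
    """Map each file path in a mod version to the SHA-256 of its contents."""
    return {path: hashlib.sha256(content).hexdigest() for path, content in files.items()}


def diff_manifests(old: dict[str, str], new: dict[str, str]) -> dict[str, list[str]]:
    """List the files added, removed, or changed between two versions."""
    return {
        "added": sorted(new.keys() - old.keys()),
        "removed": sorted(old.keys() - new.keys()),
        "changed": sorted(p for p in old.keys() & new.keys() if old[p] != new[p]),
    }


# Usage: focus re-vetting on what actually changed between versions.
v1 = build_manifest({"mod.json": b'{"version": 1}', "scripts/main.lua": b"print('hi')"})
v2 = build_manifest({"mod.json": b'{"version": 2}', "scripts/main.lua": b"print('hi')",
                     "scripts/net.lua": b"-- new file"})
changes = diff_manifests(v1, v2)
```

A diff like this does not replace a full scan of the new version, but it tells a reviewer where to look first, for example at a newly added script.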

Post-launch monitoring is also critical. Even after approval, a mod might develop issues due to game updates, conflicts with other mods, or changes introduced by the creator. Continuous feedback from the community and periodic re-evaluations help identify these problems promptly, so that the mod can be updated by its creator or, if necessary, delisted.
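
A periodic re-evaluation job might apply rules along these lines. This is a sketch under stated assumptions: the `ApprovedMod` record, the `needs_reevaluation` function, and the default threshold of five open reports are all invented for illustration, and a real site would tune both the triggers and the threshold.

```python
from dataclasses import dataclass


@dataclass
class ApprovedMod:
    mod_id: str
    approved_for_game_version: str
    open_reports: int = 0


def needs_reevaluation(
    mod: ApprovedMod, current_game_version: str, report_threshold: int = 5
) -> list[str]:
    """Return the reasons an approved mod should be reviewed again (empty if none)."""
    reasons = []
    if mod.approved_for_game_version != current_game_version:
        reasons.append("game updated since approval")
    if mod.open_reports >= report_threshold:
        reasons.append("open reports at or above threshold")
    return reasons
```

Returning reasons rather than a bare yes/no keeps the outcome explainable, both to the moderator who picks up the review and to the creator who is told why the mod was flagged.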

Transparency, Communication, and Education

Building trust with both mod creators and users requires transparency. Gaming sites should clearly communicate why a mod was rejected or removed, providing specific reasons and, if possible, guidance on how to rectify issues. This feedback loop is crucial for helping modders improve their creations and understand the platform’s standards.

Furthermore, educating the community about mod safety practices, how to identify suspicious files, and the importance of reporting issues fosters a more responsible and vigilant user base. Regular communication about platform updates, security measures, and policy changes keeps everyone informed and engaged.

Conclusion: Balancing Innovation with Integrity

Vetting user-submitted game mods is an ongoing, dynamic process that requires a combination of technology, human expertise, and community engagement. By establishing clear guidelines, implementing multi-layered review processes, empowering the community, and committing to continuous monitoring and transparent communication, gaming sites can create a safer, higher-quality environment for all. This approach not only protects users from potential harm but also fosters a thriving modding community, ensuring the longevity and continued evolution of beloved games.