How should we score evolving live-service games fairly in our gaming reviews?

The Shifting Sands of Game Evaluation

Reviewing video games has long been a foundational practice in the industry, guiding consumer choices and celebrating artistic achievement. However, the advent and proliferation of live-service games have thrown a significant wrench into the traditional review machinery. Unlike their static predecessors, live-service titles are ever-evolving entities, frequently receiving content updates, balance changes, bug fixes, and even complete overhauls months or years after their initial release. This dynamic nature poses a profound challenge: how can reviewers assign a definitive score to a product that is constantly in flux?

The core dilemma lies in the impermanence of the “launch state.” A game might release in a barebones, buggy, or poorly balanced condition, only to transform into a masterpiece (or vice versa) through subsequent patches. A single, fixed score given at launch can quickly become irrelevant, misleading potential players who might encounter a vastly different experience later on. This necessitates a re-evaluation of our review methodologies to ensure fairness not only to the developers who continue to iterate but, more importantly, to the audience relying on our critical assessments.

Breaking Down the Traditional Review Paradigm

For decades, a game review was largely a snapshot in time. You played the finished product, assessed its graphics, gameplay, story, sound, and value, and then assigned a score reflecting that complete package. This model works well for single-player, narrative-driven experiences that see minimal post-launch changes. For live-service games, however, it breaks down, because the product being scored keeps changing after the score is published.

Consider a game like No Man’s Sky, which launched to significant criticism but evolved into a beloved space exploration title years later. An initial low score, while accurate at the time, would hardly reflect the current state of the game. Conversely, a game might launch strong but deteriorate over time due to poor updates or aggressive monetization. The traditional fixed score fails to capture these trajectories, leaving readers in the dark about the true value proposition at any given moment.

Strategies for Initial Reviews: Setting the Baseline

When a live-service game launches, it’s crucial for an initial review to establish a baseline. This review should primarily focus on the foundational elements: the core gameplay loop, technical stability, art direction, initial content offering, and the immediate player experience. It should also critically assess the developer’s stated roadmap and monetization strategies, identifying potential red flags or promising directions.

Just as importantly, the initial review must communicate its inherent limitations. Reviewers should clearly state that the score reflects the game as it exists at launch, ideally naming the version or date reviewed, and that the experience is expected to change. Transparency about the review process and the evolving nature of the game helps manage reader expectations and sets the stage for future updates or re-evaluations.

The Case for Dynamic Scoring or Re-reviews

To truly serve the audience and accurately reflect a live-service game’s journey, review outlets must adopt more dynamic approaches. There are several viable models:

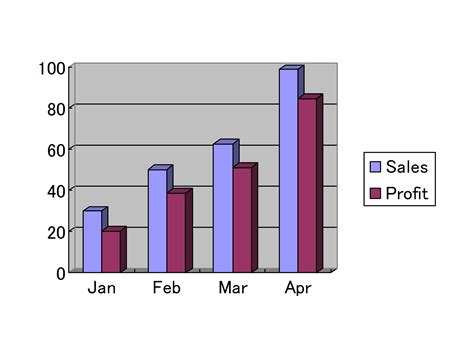

- Evolving Scores: A single score that is periodically updated. This requires continuous re-engagement with the game and diligent tracking of changes, along with clear version notes explaining score fluctuations.

- Supplemental Reviews/Updates: Maintaining the initial score but publishing new articles or “review diaries” that detail major updates, re-evaluate specific aspects, and offer updated impressions.

- Segmented Reviews: Assigning scores to different aspects (e.g., “Launch Content Score,” “Long-Term Potential Score,” “Monetization Score”) that can be updated independently.

Regardless of the chosen method, consistency and clear communication are paramount. Readers need to understand when and why a score might change or how subsequent content impacts the original assessment. This proactive approach ensures that our reviews remain relevant and valuable throughout a game’s lifespan.
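To make the evolving and segmented models more concrete, here is a minimal sketch of how a review record could keep a dated, annotated history for each aspect instead of a single number. Everything in it is an assumption made for illustration: the 0-10 scale, the class and field names, and the example game, versions, dates, and scores are hypothetical and do not describe any outlet's actual system.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class ScoreRevision:
    """One dated score for one aspect, tied to the version that was played."""
    score: float        # assumed 0-10 scale
    game_version: str   # patch or build the reviewer played
    reviewed_on: date
    note: str           # the "version note" explaining the score


@dataclass
class SegmentedReview:
    """Keeps a revision history per aspect so each can change independently."""
    title: str
    history: dict[str, list[ScoreRevision]] = field(default_factory=dict)

    def revise(self, aspect: str, revision: ScoreRevision) -> None:
        # Reject out-of-range scores and unexplained changes.
        if not 0 <= revision.score <= 10:
            raise ValueError("score must be between 0 and 10")
        if not revision.note.strip():
            raise ValueError("every score change needs an explanatory note")
        self.history.setdefault(aspect, []).append(revision)

    def launch(self, aspect: str) -> ScoreRevision:
        # The first recorded revision is the launch baseline.
        return self.history[aspect][0]

    def current(self, aspect: str) -> ScoreRevision:
        # The most recently recorded revision is the live score.
        return self.history[aspect][-1]

    def changelog(self, aspect: str) -> list[str]:
        # Reader-facing lines showing when and why the score moved.
        return [
            f"{r.reviewed_on.isoformat()} (v{r.game_version}): {r.score:.1f} - {r.note}"
            for r in self.history[aspect]
        ]


# Hypothetical usage: the launch baseline stays on record next to later revisions.
review = SegmentedReview("Example Game")
review.revise("Launch Content", ScoreRevision(
    5.5, "1.0", date(2024, 3, 1), "Thin mission variety at launch."))
review.revise("Monetization", ScoreRevision(
    7.0, "1.0", date(2024, 3, 1), "Cosmetics only, no paid power."))
review.revise("Monetization", ScoreRevision(
    4.5, "1.4", date(2024, 9, 15), "Season pass now gates new characters."))

for line in review.changelog("Monetization"):
    print(line)
```

Requiring a note on every revision is a deliberate choice in this sketch: it forces the "why" to be recorded alongside the "when," so a reader can always see the launch score, the current score, and the reason for the difference.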

Key Factors to Monitor in Ongoing Evaluation

When assessing updates or re-reviewing a live-service title, several critical areas warrant close attention:

- Content Additions: Quantity, quality, and originality of new maps, characters, missions, and modes.

- Technical Performance & Stability: Bug fixes, improvements in server stability, and overall polish.

- Balance Changes: How updates impact gameplay fairness, meta shifts, and player experience across different skill levels.

- Monetization Practices: Whether new cosmetics, battle passes, and in-game purchases are fair and add genuine value, or are predatory.

- Community Engagement: Developer responsiveness to player feedback and transparency in communication.

- Longevity & Retention: How well the game maintains player interest over time, a key indicator of successful live-service design.

These factors collectively paint a picture of how a game is evolving and whether it’s truly delivering on its live-service promise.

Towards a Dynamic Review Methodology

The challenge of scoring evolving live-service games fairly is not easily overcome, but it presents an opportunity for review media to innovate. By moving beyond the static, one-and-done review model, we can better serve our audience and provide a more accurate, evolving reflection of games that are themselves in constant motion. This requires a commitment to ongoing engagement, transparent communication, and a willingness to adapt traditional critical frameworks to the realities of modern game development. The goal is not just to judge a game, but to track its journey and inform players at every significant milestone.