What strategies help gaming communities moderate mod descriptions and user comments effectively?

The Vital Role of Moderation in Gaming Communities

Gaming communities thrive on creativity, interaction, and shared passion. Mods, in particular, let players extend and customize their games. That freedom, however, makes effective moderation essential, especially for mod descriptions and user comments. Unmoderated content can quickly lead to misinformation, toxicity, security risks such as malicious downloads, and a hostile environment that drives users away. Building a healthy, inclusive, and safe space requires a strategic approach that balances oversight with community autonomy.

Establishing Comprehensive Guidelines and Policies

The foundation of any successful moderation strategy is a clear, accessible, and comprehensive set of guidelines. These rules should explicitly cover what is acceptable and unacceptable for both mod descriptions and user comments. For mod descriptions, this includes requirements for accurate information, warnings about potentially mature or sensitive content, prohibitions against malicious software, and clear expectations for attribution. For user comments, rules must address hate speech, personal attacks, spam, harassment, and other disruptive behaviors.

These guidelines should be prominently displayed, easy to understand, and regularly updated. Transparency about enforcement policies, including a clear appeal process, helps build trust within the community and ensures that moderation decisions are perceived as fair and consistent. Education about these rules, rather than just enforcement, can also foster a more self-regulating community.

Utilizing Advanced Moderation Tools and Technology

Manual moderation alone is often insufficient for large, active gaming communities, so technology is needed to scale moderation to that volume. Useful tools include:

- Keyword and Phrase Filters: Automatically detecting and flagging inappropriate language in both descriptions and comments.

- AI and Machine Learning: Employing algorithms for sentiment analysis, identifying patterns indicative of spam, hate speech, or malicious intent, and flagging content for human review.

- Spam Detection: Tools that recognize and remove repetitive or unsolicited content.

- Version Control for Mods: Tracking changes in mod descriptions to quickly identify and roll back problematic alterations.

- Pre-moderation/Post-moderation: Reviewing content before it is published (pre-moderation) for all new mod submissions and, where appropriate, for comments from new users, while letting established users publish immediately and reviewing their content afterward (post-moderation), backed by robust reporting systems.
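Keyword filters and spam detection are the simplest of these tools to automate. The following is a minimal sketch, assuming comments arrive as plain strings tagged with a user ID. The phrase list, `matched_phrases`, `DuplicateSpamDetector`, and its thresholds are hypothetical illustrations; a real filter would also need to cope with misspellings, character substitutions, and multiple languages.

```python
import re
import time
from collections import defaultdict, deque

# Hypothetical example phrases; each community maintains its own list.
BLOCKED_PHRASES = ["free gift card", "buy followers", "crack download"]


def build_phrase_pattern(phrases):
    """Compile one case-insensitive regex that matches any phrase as whole
    words, tolerating extra whitespace between the words."""
    parts = [r"\s+".join(re.escape(word) for word in phrase.split()) for phrase in phrases]
    return re.compile(r"\b(?:" + "|".join(parts) + r")\b", re.IGNORECASE)


PHRASE_PATTERN = build_phrase_pattern(BLOCKED_PHRASES)


def matched_phrases(text):
    """Return the normalized blocked phrases found in text (empty list = clean)."""
    return [" ".join(m.group(0).lower().split()) for m in PHRASE_PATTERN.finditer(text)]


class DuplicateSpamDetector:
    """Flag a user who posts the same normalized text more than
    `max_repeats` times within a sliding window of `window_seconds`."""

    def __init__(self, max_repeats=3, window_seconds=60.0):
        self.max_repeats = max_repeats
        self.window_seconds = window_seconds
        self._history = defaultdict(deque)  # (user_id, normalized text) -> timestamps

    def is_spam(self, user_id, text, now=None):
        now = time.monotonic() if now is None else now
        key = (user_id, " ".join(text.lower().split()))
        stamps = self._history[key]
        # Drop timestamps that have fallen outside the sliding window.
        while stamps and now - stamps[0] > self.window_seconds:
            stamps.popleft()
        stamps.append(now)
        return len(stamps) > self.max_repeats


if __name__ == "__main__":
    print(matched_phrases("Get a FREE gift card here"))  # ['free gift card']
    detector = DuplicateSpamDetector(max_repeats=2, window_seconds=30)
    print([detector.is_spam("u1", "Check my mod!", now=t) for t in (0, 5, 10)])  # [False, False, True]
```

Matches are returned rather than acted on, so the caller can decide whether a hit means "block", "hold for review", or just "flag".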

These tools act as a first line of defense, reducing the workload on human moderators and allowing them to focus on more complex or nuanced cases.
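Pre/post-moderation routing and description version history can be sketched together, since both sit on the submission path. This assumes the platform knows each author's account age and number of previously approved posts; the thresholds, `route_submission`, and `DescriptionHistory` are hypothetical names for illustration, not any particular platform's API.

```python
from dataclasses import dataclass, field
from enum import Enum


class Route(Enum):
    PRE_MODERATION = "hold for review before publishing"
    POST_MODERATION = "publish now, review afterward or on report"


@dataclass
class Author:
    user_id: str
    account_age_days: int
    approved_posts: int


# Hypothetical thresholds for treating an account as "established".
MIN_ACCOUNT_AGE_DAYS = 30
MIN_APPROVED_POSTS = 10


def route_submission(author, kind):
    """Pick a moderation route; kind is 'new_mod' or 'comment'."""
    if kind == "new_mod":
        return Route.PRE_MODERATION  # every new mod submission is reviewed first
    established = (author.account_age_days >= MIN_ACCOUNT_AGE_DAYS
                   and author.approved_posts >= MIN_APPROVED_POSTS)
    return Route.POST_MODERATION if established else Route.PRE_MODERATION


@dataclass
class DescriptionHistory:
    """Keeps every revision of a mod description as (editor_id, text)."""
    revisions: list = field(default_factory=list)

    def edit(self, editor_id, text):
        self.revisions.append((editor_id, text))

    @property
    def current(self):
        return self.revisions[-1][1] if self.revisions else ""

    def rollback_to(self, index, moderator_id):
        """Restore revision `index` by appending it as a new revision, so the
        problematic edit stays in the history for auditing."""
        text = self.revisions[index][1]
        self.revisions.append((moderator_id, text))
        return text


if __name__ == "__main__":
    history = DescriptionHistory()
    history.edit("author42", "Adds 20 new weapons. Requires Core Lib 2.0.")
    history.edit("author42", "Adds 20 new weapons. Get the fix from <suspicious link>")
    history.rollback_to(0, moderator_id="mod_anna")
    print(history.current)  # Adds 20 new weapons. Requires Core Lib 2.0.
```

Rolling back by appending, rather than deleting the bad revision, is a design choice: it keeps evidence for appeals and repeat-offender checks.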

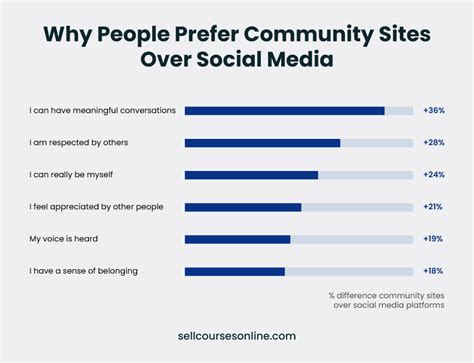

Empowering and Engaging the Community

A community-driven approach is invaluable for effective moderation. Empowering users to report problematic content significantly expands the moderation team’s reach. Key elements include:

- Easy-to-Use Reporting Systems: Making it straightforward for users to flag inappropriate mod descriptions or comments, with clear categories for issues.

- Volunteer Moderators: Recruiting trusted and active community members to assist with moderation, providing them with clear guidelines, training, and support.

- Feedback Loops: Informing users when action has been taken on their reports, which encourages continued participation and reinforces the idea that their efforts make a difference.

- Fostering a Culture of Respect: Encouraging positive interactions and discouraging negativity through community events, leaderboards for helpful users, and showcasing positive mod reviews.

By making users stakeholders in the health of their community, platforms can cultivate a more responsible and self-regulating environment.
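A categorized reporting system with a built-in feedback loop might look like the sketch below. The category names, `ReportQueue`, and the `notify` callback (standing in for something like an on-site inbox message) are all hypothetical; the point is that resolving an item closes every open report on it and tells each reporter what happened.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, Dict


class ReportCategory(Enum):
    SPAM = "spam"
    HARASSMENT = "harassment"
    HATE_SPEECH = "hate speech"
    MALWARE = "malicious download"
    MISLEADING = "misleading description"
    OTHER = "other"


@dataclass
class Report:
    report_id: int
    reporter_id: str
    content_id: str
    category: ReportCategory
    note: str = ""
    status: str = "open"


class ReportQueue:
    """Collects categorized reports and notifies reporters on resolution."""

    def __init__(self, notify: Callable[[str, str], None]):
        self._notify = notify  # hypothetical hook: (user_id, message) -> None
        self._reports: Dict[int, Report] = {}
        self._next_id = 1

    def submit(self, reporter_id, content_id, category, note=""):
        report = Report(self._next_id, reporter_id, content_id, category, note)
        self._reports[report.report_id] = report
        self._next_id += 1
        return report.report_id

    def open_reports_for(self, content_id):
        return [r for r in self._reports.values()
                if r.content_id == content_id and r.status == "open"]

    def resolve_content(self, content_id, action_taken):
        """Close every open report on one item and tell each reporter the outcome."""
        resolved = self.open_reports_for(content_id)
        for report in resolved:
            report.status = "resolved"
            self._notify(report.reporter_id,
                         f"Your {report.category.value} report on {content_id} "
                         f"was reviewed: {action_taken}.")
        return len(resolved)


if __name__ == "__main__":
    queue = ReportQueue(notify=lambda user, text: print(user, "->", text))
    queue.submit("alice", "comment-881", ReportCategory.HARASSMENT)
    queue.submit("bob", "comment-881", ReportCategory.HARASSMENT, note="targets another user")
    queue.resolve_content("comment-881", "comment removed")
```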

Building Dedicated Moderation Teams and Processes

While technology and community reporting are essential, a dedicated team of professional moderators is the backbone of robust content moderation. This team is responsible for:

- Consistent Enforcement: Ensuring rules are applied uniformly across the platform.

- Complex Case Review: Handling nuanced situations that AI or community reporting might miss, such as veiled threats, subtle harassment, or context-dependent inappropriate content.

- Appeals Process Management: Fairly reviewing user appeals against moderation decisions.

- Staying Updated: Keeping abreast of new moderation techniques, emerging threats, and community trends.

- Mental Health Support: Providing resources and support for moderators who regularly deal with challenging and often disturbing content.

Clear escalation paths within the moderation team ensure that difficult cases receive appropriate attention and resolution.
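One way to make escalation paths explicit is to write them down as data. The sketch below assumes three hypothetical tiers (volunteer, staff, senior) and a made-up mapping from case type to the lowest tier allowed to decide it; a real team would set its own tiers and mapping.

```python
from enum import IntEnum


class Tier(IntEnum):
    VOLUNTEER = 1
    STAFF = 2
    SENIOR = 3


# Hypothetical mapping from case type to the lowest tier allowed to decide it.
MIN_TIER = {
    "spam": Tier.VOLUNTEER,
    "off_topic": Tier.VOLUNTEER,
    "harassment": Tier.STAFF,
    "hate_speech": Tier.STAFF,
    "malware": Tier.SENIOR,
    "threat": Tier.SENIOR,
}


def assign_tier(case_type, current_tier, escalate=False):
    """Return the tier that should handle a case.

    Unknown case types default to STAFF. Pass escalate=True when the current
    handler is unsure, or when an appeal is filed against a decision made at
    current_tier; the case then moves at least one tier up (capped at SENIOR).
    """
    required = MIN_TIER.get(case_type, Tier.STAFF)
    if escalate:
        required = max(required, Tier(min(current_tier + 1, Tier.SENIOR)))
    return required
```

For example, `assign_tier("spam", Tier.VOLUNTEER, escalate=True)` routes an appealed spam removal to staff, while malware reports always reach the senior tier.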

Proactive Education and Open Communication

Prevention is generally more effective than reaction. Platforms should proactively educate mod authors on best practices for creating safe and informative mod descriptions, including guidance on categorizing content, providing clear installation instructions, and declaring dependencies or potential conflicts. Similarly, educating users about the code of conduct and the impact of their comments can reduce violations.
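Author guidance of this kind can be backed by an automated pre-submission checklist that tells authors what is missing before a human moderator sees the mod. The sketch below assumes a structured submission form; the field names, the category set, and `review_checklist` are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical content categories a platform might offer.
CONTENT_CATEGORIES = {"gameplay", "visuals", "audio", "ui", "utility"}


@dataclass
class ModDescription:
    title: str
    body: str
    category: str
    install_steps: List[str] = field(default_factory=list)
    dependencies: List[str] = field(default_factory=list)
    no_dependencies: bool = False  # author explicitly confirms there are none
    mature_content: bool = False
    content_warning: str = ""
    credits: List[str] = field(default_factory=list)
    uses_third_party_assets: bool = False


def review_checklist(desc):
    """Return a list of human-readable problems; an empty list means ready for review."""
    problems = []
    if desc.category not in CONTENT_CATEGORIES:
        problems.append(f"unknown category '{desc.category}'")
    if not desc.install_steps:
        problems.append("missing installation instructions")
    if not desc.dependencies and not desc.no_dependencies:
        problems.append("dependencies not declared (list them or confirm there are none)")
    if desc.mature_content and not desc.content_warning.strip():
        problems.append("mature content flagged but no content warning given")
    if desc.uses_third_party_assets and not desc.credits:
        problems.append("third-party assets used but no attribution provided")
    return problems
```

Returning messages instead of rejecting outright keeps the check educational: authors see exactly what to fix.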

Regular communication about moderation efforts, challenges, and policy updates helps maintain transparency and trust. This can include blog posts, community announcements, or direct messages. Establishing a clear channel for feedback from mod authors and users about moderation processes allows for continuous improvement and adaptation.

Conclusion: A Continuous Commitment to Community Health

Effective moderation of mod descriptions and user comments is not a one-time task but an ongoing commitment. It depends on clear policies, capable technology, an engaged community, dedicated human oversight, and continuous education working together. By implementing these strategies, gaming platforms and communities can create vibrant, safe, and respectful environments where creativity flourishes and every member feels valued and protected, supporting the long-term health and growth of the gaming ecosystem.