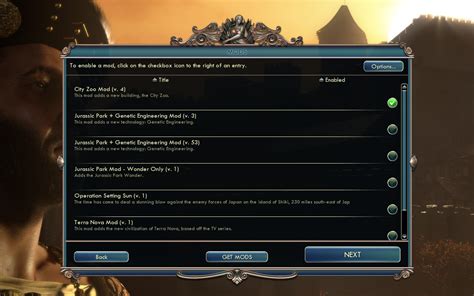

How can a gaming community moderate mod submissions for quality & safety effectively?

The Crucial Role of Mod Moderation

User-generated content, particularly in the form of modifications (mods), is a cornerstone of many vibrant gaming communities. Mods extend gameplay, add new features, and keep games fresh for years. However, this open-ended creativity comes with a significant responsibility: ensuring that all submitted mods meet standards for quality, functionality, and, most importantly, player safety. Without effective moderation, a community risks fragmentation, security breaches, and a tarnished reputation. The challenge lies in balancing creative freedom with the need for a curated, secure environment.

Challenges in Mod Submission Management

Moderating mod submissions is far from simple. Communities face a multitude of hurdles, including the sheer volume of submissions, the technical complexity of different mods, and the subjective nature of “quality.” Malicious content, such as malware or inappropriate material, poses a constant threat, requiring vigilant scanning and rapid response. Furthermore, maintaining consistent standards across a diverse global community, often with volunteer moderators, adds another layer of complexity. The evolving nature of games and modding tools also means that moderation guidelines and techniques must continuously adapt.

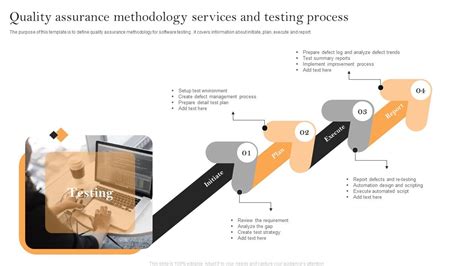

Strategies for Quality Control

To ensure high-quality mod experiences, communities should implement a structured approach. This begins with clear, publicly accessible guidelines outlining what is expected in terms of functionality, stability, performance, and originality. Submissions should ideally undergo a testing phase, whether automated (for technical checks) or manual (for gameplay evaluation). Peer review systems, where trusted community members or other modders evaluate submissions, can be invaluable. Version control and update tracking are equally essential, so that previously approved mods remain functional and safe after game updates.

- Clear & Comprehensive Guidelines: Publish detailed rules on technical requirements, content standards, and submission processes.

- Staged Submission Process: Implement a system where mods go through initial review, testing, and then final approval.

- Technical Vetting: Check for compatibility, bugs, and performance issues before approval.

- Community Feedback Loops: Allow players to rate, review, and report issues with approved mods.
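The staged submission process in the list above can be modeled as a small state machine. The following is a minimal sketch, not a reference implementation: the stage names and the `advance` helper are hypothetical, chosen only to illustrate that a mod cannot skip from submission straight to approval.

```python
from enum import Enum


class Stage(Enum):
    # Hypothetical stage names for illustration
    SUBMITTED = "submitted"
    INITIAL_REVIEW = "initial_review"
    TESTING = "testing"
    APPROVED = "approved"
    REJECTED = "rejected"


# Allowed moves between stages; a stage that is not a key here is terminal.
TRANSITIONS = {
    Stage.SUBMITTED: {Stage.INITIAL_REVIEW},
    Stage.INITIAL_REVIEW: {Stage.TESTING, Stage.REJECTED},
    Stage.TESTING: {Stage.APPROVED, Stage.REJECTED},
}


def advance(current: Stage, target: Stage) -> Stage:
    """Return the new stage, or raise ValueError if the move is not allowed."""
    if target not in TRANSITIONS.get(current, set()):
        raise ValueError(f"cannot move from {current.value} to {target.value}")
    return target
```

Keeping the allowed transitions in one table makes the policy easy to audit and to change, for example if a community later adds a separate security review stage.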

Ensuring Player Safety and Security

Safety is paramount. Unsafe mods can contain malware, expose personal data, or introduce inappropriate content, so communities need layered security measures. These include automated scanning for known malware signatures and suspicious code, as well as manual review for content that violates community standards (e.g., hate speech, explicit material). Age ratings, content warnings, and easy-to-use reporting mechanisms empower players to contribute to safety. Transparent policies on data handling and privacy are also crucial for mods that interact with user data or online services.
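One simple form of automated scanning is to compare each file in a submission against a blocklist of hashes of known-bad files. The sketch below assumes mods are uploaded as ZIP archives and that the community maintains its own set of SHA-256 digests; the function name `scan_archive` and the `blocked_hashes` parameter are hypothetical. It uses only the Python standard library.

```python
import hashlib
import zipfile
from typing import BinaryIO, List, Set, Union


def scan_archive(archive: Union[str, BinaryIO], blocked_hashes: Set[str]) -> List[str]:
    """Return names of archive members whose SHA-256 digest is blocklisted."""
    flagged = []
    with zipfile.ZipFile(archive) as zf:
        for info in zf.infolist():
            if info.is_dir():
                continue  # directories have no content to hash
            # Reads the whole member into memory; a real pipeline would
            # likely enforce a size limit before doing this.
            digest = hashlib.sha256(zf.read(info)).hexdigest()
            if digest in blocked_hashes:
                flagged.append(info.filename)
    return flagged
```

Exact hash matching only catches files that have already been identified as malicious, so it complements rather than replaces dedicated malware scanners and the manual review described above.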

Leveraging Community Power

No moderation team, paid or volunteer, can manage the scale of modern modding communities alone. Empowering the community is key. This can be achieved by establishing a dedicated team of trusted volunteer moderators, implementing user-driven rating and review systems that highlight high-quality and safe mods, and creating accessible reporting tools for players to flag problematic content. Fostering a culture of accountability and positive contribution encourages modders to self-regulate and helps surface issues more quickly.
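A rating system that highlights good mods has to cope with mods that have only a handful of votes. One common approach, offered here as an illustration rather than something the guidance above prescribes, is to rank by the lower bound of the Wilson score interval instead of the raw share of positive votes. The function name and the thumbs-up/thumbs-down voting model are assumptions.

```python
import math


def wilson_lower_bound(positive: int, total: int, z: float = 1.96) -> float:
    """Lower bound of the Wilson score interval for the positive-vote share.

    z = 1.96 corresponds to roughly 95% confidence.
    """
    if total == 0:
        return 0.0  # no votes yet: rank at the bottom
    p = positive / total
    denom = 1 + z * z / total
    centre = p + z * z / (2 * total)
    margin = z * math.sqrt((p * (1 - p) + z * z / (4 * total)) / total)
    return (centre - margin) / denom
```

With this score, a mod with 90 positive votes out of 100 ranks above a mod with 5 out of 5, because the smaller sample carries more uncertainty.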

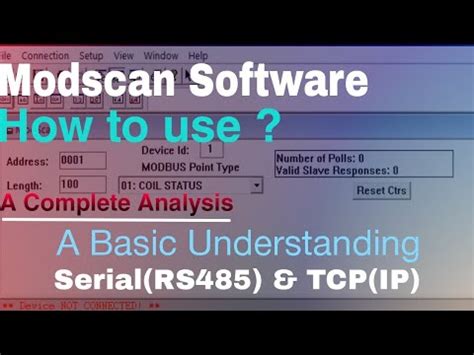

Tools and Technology for Scalable Moderation

As modding communities grow, manual moderation becomes unsustainable. Leveraging technology is essential. Automated tools can scan mod files for malicious code, check for conflicts with existing game files, and even perform basic content analysis. Dedicated modding platforms often come equipped with built-in moderation tools, submission queues, and version management. Implementing AI or machine learning algorithms could further assist in identifying patterns of problematic submissions, though human oversight remains critical for nuanced decisions.
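As an example of an automated conflict check, the sketch below reports which files in a mod would overwrite files that ship with the base game. It assumes both sides can be expressed as lists of relative paths and that paths should be compared case-insensitively; `find_conflicts` and `_normalise` are hypothetical names.

```python
from pathlib import PurePosixPath
from typing import Iterable, List


def _normalise(path: str) -> str:
    # Treat backslashes as separators, collapse redundant parts,
    # and lowercase so that comparison ignores case.
    return str(PurePosixPath(path.replace("\\", "/"))).lower()


def find_conflicts(mod_paths: Iterable[str], game_paths: Iterable[str]) -> List[str]:
    """Return the mod paths that collide with a base-game file path."""
    game = {_normalise(p) for p in game_paths}
    return sorted(p for p in mod_paths if _normalise(p) in game)
```

An overlap is not always a problem, since many mods replace game assets on purpose, so a result like this is better used to flag a submission for human review than to reject it automatically.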

Best Practices for Continuous Improvement

Effective moderation is an ongoing process. Communities should regularly review their guidelines and processes, adapting them based on feedback, new threats, and evolving community norms. Transparency about moderation decisions, even when difficult, builds trust. Regular communication with modders about changes in policy or technical requirements helps them stay compliant. Investing in training for volunteer moderators and providing clear escalation paths for complex cases ensures consistent and fair application of rules.

Conclusion

Moderating mod submissions for quality and safety is a complex but vital task for any gaming community. It demands a multi-pronged approach that integrates clear guidelines, robust technical checks, active community involvement, and the strategic use of automated tools. By fostering a collaborative environment where modders, players, and moderators work together, communities can continue to enjoy the boundless creativity of user-generated content while maintaining a safe, high-quality, and thriving ecosystem for everyone.