How do Discord mods handle persistent trolls in a large gaming server?

The Ever-Present Challenge of Online Trolling

Large gaming Discord servers are vibrant hubs of activity, connecting players from around the globe. That same bustle, however, makes them prime targets for persistent trolls whose aim is to disrupt, annoy, or provoke. Managing these individuals is a significant challenge for moderation teams: the sheer volume of users, the range of personalities, and the passionate, competitive nature of gaming can all inadvertently foster disruptive behavior. Left unchecked, persistent trolls threaten a server’s health, drive members away, and spoil everyone else’s enjoyment.

Establishing a Robust Foundation: Proactive Strategies

Before reactive measures even come into play, a strong proactive foundation is crucial. This begins with clear, well-communicated server rules that outline acceptable behavior, content standards, and the consequences of violations. Effective onboarding processes, such as dedicated welcome channels, mandatory rule acknowledgments, and easily accessible community guidelines, help set expectations for new members from the outset. A positive, inclusive community culture also discourages trolling, because members who value that culture are less willing to tolerate disruptive behavior.

Regular community engagement and transparent moderation practices are also vital. When members understand why rules exist and how they are consistently enforced, they are more likely to trust and support the moderation team, and crucially, they become more proactive in reporting problematic behavior themselves.

The Escalation Ladder: Reactive Moderation Tools

When a troll inevitably emerges, moderation teams typically follow a structured escalation ladder. Initial responses often involve warnings, either posted publicly in the channel or sent privately to the user, that remind them of the specific rules they’ve violated. For continued minor disruption, a temporary ‘mute’ might be applied, preventing the user from sending messages or joining voice channels for a defined period and giving them time to cool down and reflect on their actions.

If the disruptive behavior persists after warnings and mutes, moderators take more severe action. A ‘kick’ removes a user from the server, but they can rejoin with any valid invite link, so it mainly serves as a strong final warning. For truly persistent, malicious, or severely rule-breaking trolls, a ‘ban’ is the ultimate measure: it removes the user and prevents that account from rejoining unless a moderator later lifts the ban. Such decisions are usually made after careful consideration, often involving consultation among multiple moderators and a review of the relevant evidence.
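
The escalation ladder can be pictured as a lookup from a user's prior record to a recommended next step. The Python sketch below is a minimal illustration under stated assumptions, not any bot's actual behavior: the `LADDER` steps, the `ModLog` and `Entry` classes, and their method names are all hypothetical. It only recommends an action and leaves the final call to a moderator, in keeping with the consultation step described above.

```python
from dataclasses import dataclass, field
from enum import Enum


class Action(Enum):
    WARN = "warn"
    MUTE = "mute"
    KICK = "kick"
    BAN = "ban"


# Hypothetical ladder: the Nth logged action for a user maps to the Nth rung.
# Real servers choose their own steps, counts, and mute durations.
LADDER = [Action.WARN, Action.WARN, Action.MUTE, Action.KICK, Action.BAN]


@dataclass
class Entry:
    action: Action
    reason: str
    moderator: str


@dataclass
class ModLog:
    """Hypothetical per-user history that doubles as a simple audit trail."""
    entries: dict[int, list[Entry]] = field(default_factory=dict)

    def history(self, user_id: int) -> list[Entry]:
        # Return a copy so callers cannot silently rewrite past records.
        return list(self.entries.get(user_id, []))

    def recommend(self, user_id: int, severe: bool = False) -> Action:
        # Severe violations go straight to the top rung; everything else
        # climbs one rung per prior logged action, capped at the last rung.
        if severe:
            return Action.BAN
        prior = len(self.entries.get(user_id, []))
        return LADDER[min(prior, len(LADDER) - 1)]

    def record(self, user_id: int, action: Action, reason: str, moderator: str) -> None:
        # Called only after a moderator has confirmed (or overridden) the step.
        self.entries.setdefault(user_id, []).append(Entry(action, reason, moderator))


if __name__ == "__main__":
    log = ModLog()
    for reason in ["spam", "spam", "insults", "spam", "raid"]:
        step = log.recommend(42)
        log.record(42, step, reason, moderator="mod_a")
    print([e.action.value for e in log.history(42)])
    # prints ['warn', 'warn', 'mute', 'kick', 'ban']
```

Counting prior logged actions, rather than relying on each moderator's memory, is one way to keep enforcement consistent when several people share the workload.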

Leveraging Technology: Bots and Tools

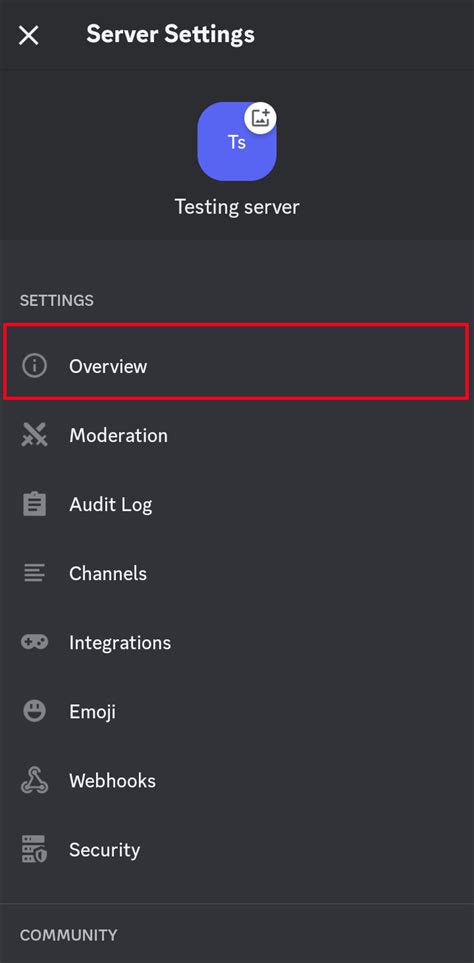

Modern Discord moderation relies heavily on a suite of bots and administrative tools. Bots such as MEE6, Dyno, and Carl-bot are widely used to automate routine tasks like filtering spam, detecting common slurs or hate-speech keywords, and issuing automated warnings. These bots also typically log the moderation actions taken through them, creating an audit trail that is invaluable for reviewing past incidents, ensuring consistent enforcement, and resolving disputes.
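
The two most common automated checks, keyword filtering and spam detection, can be sketched in a few lines of Python. This illustrates the general technique only; it is not how MEE6, Dyno, or Carl-bot are implemented. `BLOCKED_TERMS` holds placeholder words, and `SpamDetector` and `check_message` are hypothetical names. The keyword check uses regex word boundaries so that a blocked term embedded inside a longer, innocent word does not trigger, and the spam check is a per-user sliding-window rate limit.

```python
import re
from collections import defaultdict, deque

# Placeholder terms; a real server would configure its own list.
BLOCKED_TERMS = ["badword", "worseword"]

# \b word boundaries avoid flagging innocent words that merely contain a term.
BLOCKED_PATTERN = re.compile(
    r"\b(?:" + "|".join(re.escape(t) for t in BLOCKED_TERMS) + r")\b",
    re.IGNORECASE,
)


class SpamDetector:
    """Flags a user who sends more than max_messages within window_seconds."""

    def __init__(self, max_messages: int = 5, window_seconds: float = 10.0) -> None:
        self.max_messages = max_messages
        self.window_seconds = window_seconds
        self._recent = defaultdict(deque)  # user_id -> timestamps of recent messages

    def is_spam(self, user_id: int, timestamp: float) -> bool:
        times = self._recent[user_id]
        times.append(timestamp)
        # Discard timestamps that have slid out of the window.
        while times and timestamp - times[0] > self.window_seconds:
            times.popleft()
        return len(times) > self.max_messages


def check_message(detector: SpamDetector, user_id: int, content: str, timestamp: float) -> list[str]:
    """Return the reasons (if any) a message should be flagged for review."""
    reasons = []
    if BLOCKED_PATTERN.search(content):
        reasons.append("blocked term")
    if detector.is_spam(user_id, timestamp):
        reasons.append("message rate")
    return reasons
```

In this sketch a flagged message is only reported, not punished; a bot built along these lines would more plausibly post the reasons to a mod-log channel or feed them into the escalation ladder. Word lists also need regular tuning, since trolls quickly learn to misspell blocked terms.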

Beyond bots, Discord’s native features, including detailed audit logs, flexible role management, and built-in reporting systems, are essential. Members can report rule violations directly to moderators, providing context and evidence, which is crucial for informed decision-making. Custom roles allow moderators to precisely control user permissions, dictating who can access specific channels, send messages, or use particular features, thus segmenting the community and managing potential disruption more effectively.
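
Role permissions on Discord are bitfields: each permission is a single bit, a member's server-wide permissions combine @everyone and all of their roles with bitwise OR, and per-channel overwrites then deny or allow individual bits. The Python sketch below follows the overwrite order described in Discord's developer documentation (the @everyone overwrite, then all role overwrites merged together, then the member's own overwrite), but it is simplified: it omits the server-owner and `ADMINISTRATOR` bypasses, and `Overwrite`, `base_permissions`, and `channel_permissions` are hypothetical names, not a library API. The three bit values are the ones listed in that documentation.

```python
from dataclasses import dataclass
from typing import Iterable, Optional

# Bit values as listed in Discord's developer documentation.
VIEW_CHANNEL = 1 << 10
SEND_MESSAGES = 1 << 11
CONNECT = 1 << 20


@dataclass(frozen=True)
class Overwrite:
    """Per-channel allow/deny bits for one role or member (hypothetical type)."""
    allow: int = 0
    deny: int = 0


def base_permissions(everyone: int, role_perms: Iterable[int]) -> int:
    # Server-wide permissions: @everyone OR'd with every role the member holds.
    perms = everyone
    for p in role_perms:
        perms |= p
    return perms


def channel_permissions(
    base: int,
    everyone_overwrite: Optional[Overwrite],
    role_overwrites: Iterable[Overwrite],
    member_overwrite: Optional[Overwrite] = None,
) -> int:
    # Simplified: owner and ADMINISTRATOR bypasses are intentionally omitted.
    perms = base
    if everyone_overwrite is not None:
        perms &= ~everyone_overwrite.deny
        perms |= everyone_overwrite.allow
    # Role overwrites are merged first, so any role's allow beats another's deny.
    allow = deny = 0
    for ow in role_overwrites:
        allow |= ow.allow
        deny |= ow.deny
    perms &= ~deny
    perms |= allow
    # A member-specific overwrite is applied last and takes precedence.
    if member_overwrite is not None:
        perms &= ~member_overwrite.deny
        perms |= member_overwrite.allow
    return perms


if __name__ == "__main__":
    base = base_permissions(VIEW_CHANNEL | SEND_MESSAGES | CONNECT, [])
    # Hypothetical "Muted" role whose overwrite in a channel denies chat and voice.
    muted = Overwrite(deny=SEND_MESSAGES | CONNECT)
    perms = channel_permissions(base, None, [muted])
    print(bool(perms & VIEW_CHANNEL), bool(perms & SEND_MESSAGES))  # True False
```

One consequence of this ordering, at least in the model above: because role overwrites are merged before being applied, a "Muted" role's deny is cancelled if the same member holds another role whose overwrite in that channel allows the same permission, so mute roles need to be checked against every other role's channel settings.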

The Human Element: Teamwork and Well-being

Behind every successful moderation strategy is a dedicated team of human moderators. Effective communication among the mod team is paramount, often facilitated through private mod-only channels where incidents can be discussed, evidence reviewed, and decisions collectively made. Consistency in rule enforcement across all moderators is key to maintaining fairness and preventing confusion or resentment within the community.

It’s also important to acknowledge that moderating can be a stressful, emotionally draining, and often thankless job. Server owners and head moderators must prioritize the well-being of their team, offering support, clear guidelines, and a path for debriefing after particularly challenging or high-stress incidents. A burnt-out or unsupported moderation team is an ineffective one, ultimately jeopardizing the health of the entire server.

Conclusion

Handling persistent trolls in large gaming Discord servers is an ongoing, multifaceted battle that requires a careful blend of proactive community building, decisive reactive enforcement, technological assistance, and strong team coordination. By implementing robust strategies, fostering a positive community culture, and giving their moderation teams consistent support, server owners can keep their communities vibrant, safe, and enjoyable for all members and limit the damage that disruptive behavior can do.