Best methods to objectively score a game’s replayability?

The Elusive Metric: Objectively Scoring Replayability

Replayability is often cited as a cornerstone of a game’s long-term value, yet it remains one of the most subjective metrics in game reviews. A game can be lauded for its intricate story, stunning graphics, or innovative mechanics, but if it doesn’t offer a compelling reason to return after the credits roll, its overall value proposition diminishes. The challenge for reviewers lies in moving beyond personal enjoyment to establish a systematic, objective framework for assessing how much a game genuinely encourages repeated play. How can we quantify something that feels so inherently personal?

Deconstructing Replayability: Core Components

To objectively score replayability, we must first break it down into its constituent elements. These are the factors that intrinsically motivate players to revisit a game, offering new experiences or deeper engagement with each playthrough. Identifying these elements allows for a more granular assessment.

- Player Choice & Consequence: Games with branching narratives, multiple endings, or significant player-driven decisions naturally invite replays to explore alternative paths.

- Procedural Generation & Randomization: Elements like randomly generated maps, loot, enemy placements, or event sequences ensure that no two playthroughs are identical.

- Content Volume & Variety: This includes unlockable characters, classes, weapons, different game modes (e.g., New Game+, challenge modes, multiplayer), and secret areas.

- Skill Ceiling & Mastery: Games with deep mechanics that reward continuous practice and improvement, often found in competitive titles or games with demanding difficulty levels.

- Emergent Gameplay: Systems that allow for unforeseen interactions and unique, unscripted moments driven by player agency and game mechanics.

- Community & Multiplayer Aspects: Ongoing competitive or cooperative experiences, social hubs, and player-created content can extend a game’s life well beyond its built-in content.

Quantifiable Metrics for Assessment

While some factors like narrative choice can be difficult to ‘score’ numerically, we can create metrics to evaluate their presence and depth. For instance:

1. Branching Factor & Decision Impact

Evaluate the number of significant narrative branches, the number of distinct endings, and how much player choices actually alter the gameplay experience. A simple ‘good/bad’ ending isn’t as impactful as choices that redefine character relationships or unlock entirely new regions. A rubric could assign points based on the number of unique narrative paths and their divergence points.
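As a rough illustration, the branching factor can be measured by modelling the story as a directed graph of decision points and counting the distinct routes to an ending. This is a minimal sketch, assuming the story map is acyclic; the branch map (`STORY`) and the helper names are hypothetical, and a real game's structure would have to be charted by hand from playthroughs or a guide.

```python
from functools import lru_cache

# Hypothetical branch map: keys are story beats, values are the beats each
# player choice leads to. Beats with no outgoing choices are endings.
# Assumes the graph is acyclic (no loops back to earlier beats).
STORY = {
    "start": ["spare_rival", "fight_rival"],
    "spare_rival": ["join_guild", "go_alone"],
    "fight_rival": ["go_alone"],
    "join_guild": ["ending_peace"],
    "go_alone": ["ending_exile", "ending_throne"],
    "ending_peace": [],
    "ending_exile": [],
    "ending_throne": [],
}


def count_paths(graph, start="start"):
    """Count distinct start-to-ending paths with a memoised depth-first search."""

    @lru_cache(maxsize=None)
    def paths_from(node):
        children = graph[node]
        if not children:  # an ending terminates exactly one path
            return 1
        return sum(paths_from(child) for child in children)

    return paths_from(start)


def endings(graph):
    """Beats with no outgoing choices."""
    return [node for node, children in graph.items() if not children]


def divergence_points(graph):
    """Beats where the player picks between two or more options."""
    return [node for node, children in graph.items() if len(children) > 1]


print(count_paths(STORY))        # 5
print(len(endings(STORY)))       # 3
print(divergence_points(STORY))  # ['start', 'spare_rival', 'go_alone']
```

Counting paths rather than only endings credits games where several routes reach the same ending by different means; whether a rubric should reward that is a judgment call for the reviewer.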

2. Randomization & Procedural Depth

For games relying on procedural generation, assess the *variety* and *impact* of the random elements. Is it just random enemy spawns, or does it genuinely alter level layouts, objectives, and available resources? A scoring system could look at the number of randomized variables and their influence on core gameplay.
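One way to turn the number of randomized variables and their influence into a single figure is a weighted count, where each variable is tagged with how deeply it affects core play. The sketch below is illustrative only: the three impact tiers, their weights, and the 0-10 cap are hypothetical assumptions, not an established scale.

```python
from dataclasses import dataclass

# Hypothetical impact tiers: how strongly a randomized element changes core play.
IMPACT_WEIGHTS = {"cosmetic": 0.25, "tactical": 1.0, "structural": 2.0}


@dataclass
class RandomizedVariable:
    name: str
    impact: str  # must be a key of IMPACT_WEIGHTS


def procedural_depth(variables, cap=10.0):
    """Impact-weighted count of randomized variables, clipped to at most `cap`."""
    raw = sum(IMPACT_WEIGHTS[v.impact] for v in variables)
    return min(raw, cap)


spawns_only = [RandomizedVariable("enemy spawns", "tactical")]
full_roguelike = spawns_only + [
    RandomizedVariable("level layout", "structural"),
    RandomizedVariable("objectives", "structural"),
    RandomizedVariable("loot tables", "tactical"),
    RandomizedVariable("weather", "cosmetic"),
]

print(procedural_depth(spawns_only))     # 1.0
print(procedural_depth(full_roguelike))  # 6.25
```

The weighting captures the distinction drawn above: a game that only shuffles enemy spawns scores far lower than one that also randomizes layouts and objectives, even though both technically use randomization.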

3. Content Unlockables & Modes

This is one of the more straightforward metrics. Count the number of distinct game modes, unlockable characters/classes, challenges, or New Game+ iterations. Points can be assigned based on the sheer volume and the substantiality of the new content offered.
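A minimal sketch of this count, assuming each unlockable is tagged with a category and a rough substantiality level. The multiplier values and the sample list are hypothetical; the raw per-category tally is kept alongside the weighted score so readers can see what the number is made of.

```python
from collections import Counter

# Hypothetical substantiality multipliers: a new mode should count for more
# than a single challenge or cosmetic unlock.
SUBSTANCE = {"minor": 0.5, "moderate": 1.0, "major": 2.0}


def content_score(items):
    """items: (category, substance) pairs; returns the weighted total."""
    return sum(SUBSTANCE[substance] for _category, substance in items)


def tally(items):
    """Raw counts per category, for reporting alongside the score."""
    return Counter(category for category, _substance in items)


unlockables = [
    ("mode", "major"),          # e.g. a roguelike side mode
    ("mode", "moderate"),       # e.g. a time-attack mode
    ("character", "moderate"),
    ("character", "moderate"),
    ("challenge", "minor"),
    ("challenge", "minor"),
    ("challenge", "minor"),
    ("new_game_plus", "major"),
]

print(content_score(unlockables))  # 8.5
print(tally(unlockables))
```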

4. Skill Ceiling & Mastery Curve

For games focused on skill, assess the depth of the mechanics. Are there advanced techniques to learn? Are leaderboards present? Does the game effectively communicate opportunities for improvement? Reviewers can then judge how steep the learning curve is and how long the game’s systems keep rewarding practice.

Methodologies for Objective Scoring

To apply these metrics consistently, reviewers can employ several methodologies:

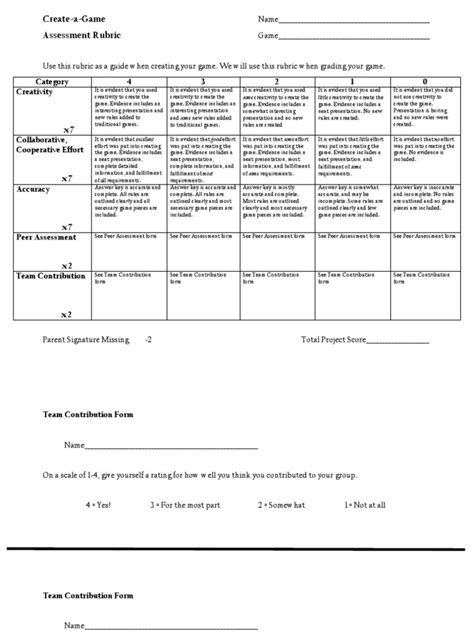

1. The Structured Replayability Rubric

Develop a standardized rubric where each identified component of replayability is given a weighted score. For example, ‘Procedural Generation’ might be worth 20% of the total replayability score, ‘Player Choice’ 25%, ‘Content Volume’ 20%, ‘Skill Ceiling’ 20%, and ‘Multiplayer/Community’ 15%. Each category would then have sub-criteria with numerical values (e.g., ‘1-3 unique endings’ = X points, ‘4+ unique endings’ = Y points). This promotes consistency across different reviews and reviewers.
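The rubric can be sketched as a weighted sum. The category weights below are the example split from the paragraph above; the 0-10 per-category scale, the concrete values standing in for X and Y, and the sample review are hypothetical assumptions for illustration.

```python
import math

# Category weights from the example split in the text (they sum to 100%).
WEIGHTS = {
    "procedural_generation": 0.20,
    "player_choice": 0.25,
    "content_volume": 0.20,
    "skill_ceiling": 0.20,
    "multiplayer_community": 0.15,
}
assert math.isclose(sum(WEIGHTS.values()), 1.0)


def endings_points(unique_endings, x_points=4.0, y_points=8.0):
    """Sub-criterion from the text: 1-3 endings earn X, 4+ earn Y.

    The values of X and Y are hypothetical placeholders on a 0-10 scale.
    """
    if unique_endings >= 4:
        return y_points
    if unique_endings >= 1:
        return x_points
    return 0.0


def replayability_score(category_scores):
    """Weighted sum of per-category scores (each 0-10) giving an overall 0-10."""
    missing = WEIGHTS.keys() - category_scores.keys()
    if missing:
        raise ValueError(f"missing categories: {sorted(missing)}")
    return sum(WEIGHTS[name] * category_scores[name] for name in WEIGHTS)


review = {
    "procedural_generation": 6.0,
    "player_choice": endings_points(5),  # 4+ endings -> Y
    "content_volume": 7.0,
    "skill_ceiling": 5.0,
    "multiplayer_community": 2.0,
}
print(round(replayability_score(review), 2))  # 5.9
```

Rejecting a review with missing categories, rather than silently treating them as zero, is a deliberate choice: it forces every reviewer to fill in the whole rubric, which is where the consistency comes from.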

2. Playtime & Engagement Observation (where applicable)

While not always feasible for initial reviews, observing community playtime metrics (e.g., Steam data, aggregated console data) for long-term reviews can provide quantitative insight into how many hours players are actually dedicating to the game post-completion. This moves beyond theoretical replayability to actual player engagement. For a single reviewer, observing their own post-review playtime can be a useful qualitative input for a structured score.
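If per-player playtime can be obtained, two simple summaries are the median hours played after completion and the share of finishers who kept playing. This sketch assumes per-player records of total hours and hours at completion are available, which public platform statistics may not provide; the record format, the one-hour threshold, and the sample data are all hypothetical.

```python
from statistics import median


def post_completion_stats(records, min_extra_hours=1.0):
    """Summarise play after the credits.

    records: (total_hours, hours_at_completion) per player, with
    hours_at_completion set to None for players who never finished.
    """
    extra = [max(total - done, 0.0) for total, done in records if done is not None]
    if not extra:
        return {"completers": 0, "median_extra_hours": 0.0, "share_replaying": 0.0}
    replaying = sum(1 for hours in extra if hours >= min_extra_hours)
    return {
        "completers": len(extra),
        "median_extra_hours": median(extra),
        "share_replaying": replaying / len(extra),
    }


# Made-up sample data for five players (hours).
sample = [(40, 25), (30, 28), (12, None), (90, 22), (26, 25.5)]
print(post_completion_stats(sample))
# {'completers': 4, 'median_extra_hours': 8.5, 'share_replaying': 0.75}
```

The median is used instead of the mean because a handful of very dedicated players (the 68 extra hours in the sample) would otherwise dominate the figure.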

3. Comparative Analysis

Compare the game’s replayability features against genre conventions and against other titles in its genre known for high replayability. Does it offer more, less, or different avenues for replay compared to its peers? This provides context for the score.
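For the numeric side of this comparison, a percentile rank expresses where a game's score sits within its peer group. This is a minimal sketch, assuming the peer scores were produced with the same rubric; the peer list is hypothetical, and ties are counted as half a place, which is one common convention among several.

```python
from bisect import bisect_left, bisect_right


def percentile_rank(score, peer_scores):
    """Percentage of peers scoring below `score`, counting ties as half."""
    if not peer_scores:
        raise ValueError("need at least one peer score")
    ordered = sorted(peer_scores)
    below = bisect_left(ordered, score)
    ties = bisect_right(ordered, score) - below
    return 100.0 * (below + 0.5 * ties) / len(ordered)


# Hypothetical rubric scores for eight genre peers.
peers = [3.0, 4.5, 5.0, 5.5, 6.0, 7.0, 8.0, 9.0]
print(percentile_rank(6.0, peers))  # 56.25
print(percentile_rank(7.5, peers))  # 75.0
```

A percentile only answers the "more or less" question; the "different avenues" question still needs a side-by-side look at which rubric categories drive each game's score.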

Conclusion: Towards a More Transparent Replayability Score

While the ‘fun factor’ of replayability will always retain a subjective element, adopting structured methodologies can significantly enhance the objectivity and transparency of a review score. By deconstructing replayability into its core components, defining quantifiable metrics, and employing a consistent rubric, reviewers can provide a more defensible and informative assessment. This approach helps readers understand not just *if* a game is replayable, but *why* and to what extent, allowing them to make more informed purchasing decisions based on their own preferences for long-term engagement.