Best practices for moderating toxic comments on popular game mod pages

Game modding communities are vibrant hubs of creativity and collaboration, but like any online space, they can be susceptible to toxicity. Unchecked, negative comments can drive away creators, discourage new users, and erode the positive spirit that defines these communities. Establishing robust moderation practices is not just about enforcing rules; it’s about nurturing a welcoming environment for everyone.

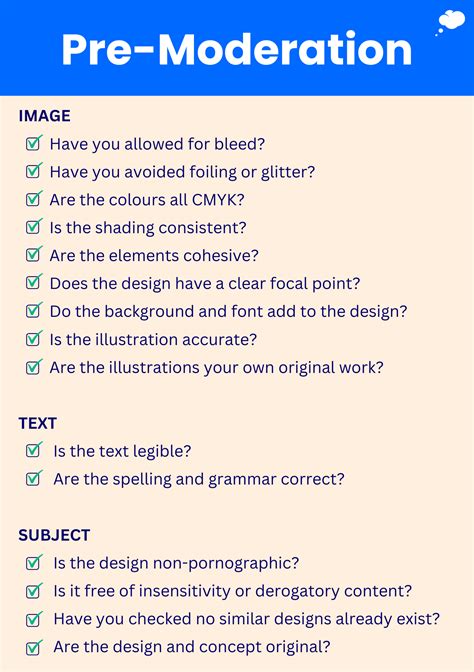

Establishing Clear and Comprehensive Community Guidelines

The foundation of any successful moderation strategy is a clearly defined set of community guidelines. These rules should outline what constitutes acceptable and unacceptable behavior, language, and content. Be specific about what kind of toxicity (e.g., hate speech, personal attacks, spam, harassment) will not be tolerated. Make these guidelines easily accessible on every mod page and community forum, ensuring users are aware of the expectations before they engage.

Leveraging Automated Tools for Proactive Filtering

Manual moderation alone can be overwhelming, especially on popular mod pages with high traffic. Automated tools and AI-powered filters can take on much of that load. They detect keywords, phrases, and patterns associated with hate speech, spam, or disruptive behavior, and then either block the matching comments from appearing publicly or hold them in a queue for moderator review. While not perfect, they act as a vital first line of defense, reducing the volume of content human moderators need to sift through.

Review and update these filters regularly so they keep pace with evolving slang and new forms of abuse. Expect false positives too, and give users a clear appeal process for comments that are wrongly flagged.
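As a rough illustration of the block-or-hold idea, here is a minimal Python sketch of a pattern-based pre-filter. Everything in it is an assumption made for illustration: the pattern lists, the `screen_comment` function, and the three-way `Action` outcome are hypothetical and not tied to any particular mod platform. A real filter would use maintained word lists or a trained classifier rather than a handful of regular expressions.

```python
import re
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Action(Enum):
    ALLOW = "allow"            # publish immediately
    HOLD = "hold_for_review"   # keep hidden until a moderator decides
    BLOCK = "block"            # never publish


# Hypothetical placeholder patterns; real lists need regular review and updates.
BLOCK_PATTERNS = [re.compile(r"\bexample_slur\b", re.IGNORECASE)]
HOLD_PATTERNS = [
    re.compile(r"\b(idiot|trash|garbage)\b", re.IGNORECASE),
    re.compile(r"(https?://\S+\s*){3,}"),  # three or more links in a row
]


@dataclass
class Decision:
    action: Action
    matched: Optional[str] = None  # the text that triggered the rule, if any


def screen_comment(text: str) -> Decision:
    """Check block patterns first, then hold patterns, else allow."""
    for pattern in BLOCK_PATTERNS:
        match = pattern.search(text)
        if match:
            return Decision(Action.BLOCK, match.group(0))
    for pattern in HOLD_PATTERNS:
        match = pattern.search(text)
        if match:
            return Decision(Action.HOLD, match.group(0))
    return Decision(Action.ALLOW)
```

Returning the matched text alongside the action gives moderators and appeal reviewers something concrete to check, which helps when a comment such as "the trash can model looks great" is held by mistake.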

Empowering and Supporting Human Moderators

Automated tools are powerful, but human judgment is indispensable. A dedicated and well-supported team of human moderators is essential for handling nuanced situations, understanding context, and making fair decisions. These moderators, whether volunteers or paid staff, need clear training on the guidelines, conflict resolution, and the tools at their disposal. Providing them with resources, peer support, and avenues to de-stress is vital to prevent burnout.

Fostering a Culture of Reporting

Beyond proactive measures, an effective reactive reporting system empowers the community to participate in moderation. Users should have an easy, anonymous way to report comments that violate guidelines. Ensure these reports are reviewed promptly and that appropriate action is taken. While users don’t need to know the specific outcome of every report, transparent communication about the moderation process can build trust.
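One possible shape for such a reporting system is sketched below in Python. The `ReportQueue` class, its method names, and the salted-hash approach are all assumptions for illustration. Note that hashing a reporter ID only hides it from casual view (pseudonymization); it is not a guarantee of anonymity, and a real system would need a proper privacy review.

```python
import hashlib
from collections import defaultdict
from typing import Dict, List, Set, Tuple


class ReportQueue:
    """Collects reports per comment without storing raw reporter IDs."""

    def __init__(self, salt: str) -> None:
        self._salt = salt
        # comment_id -> set of hashed reporter tokens
        self._reporters: Dict[str, Set[str]] = defaultdict(set)

    def _token(self, reporter_id: str) -> str:
        # Salted hash so the stored value is not the reporter's ID itself.
        data = (self._salt + reporter_id).encode("utf-8")
        return hashlib.sha256(data).hexdigest()

    def report(self, comment_id: str, reporter_id: str) -> bool:
        """Record a report; return False if this user already reported it."""
        token = self._token(reporter_id)
        if token in self._reporters[comment_id]:
            return False
        self._reporters[comment_id].add(token)
        return True

    def pending(self) -> List[Tuple[str, int]]:
        """Comments ordered by number of distinct reporters, highest first."""
        counts = [(cid, len(tokens)) for cid, tokens in self._reporters.items()]
        return sorted(counts, key=lambda item: (-item[1], item[0]))
```

Counting distinct reporters rather than raw report events keeps one persistent user from pushing a comment to the top of the queue, while still surfacing comments that many people flagged.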

Consistency, Transparency, and Education in Enforcement

Consistency is key to fair moderation. Applying rules uniformly across all users and situations builds trust and ensures that the guidelines are taken seriously. When action is taken, be transparent about why a comment was removed or an account penalized, referencing the specific guideline violated. This educates users on what is unacceptable and can deter future infractions. Instead of immediate bans for minor offenses, consider a tiered approach: warnings for first-time or less severe infractions, followed by temporary suspensions, and finally permanent bans for repeat or severe violations.
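The tiered approach can be sketched as a small escalation ladder. The Python below is a minimal sketch under stated assumptions: the `EnforcementLedger` class, the strike thresholds, and the one-day and seven-day suspension lengths are hypothetical choices, not recommendations from any platform. Each sanction carries the guideline that was violated so the user can be told why action was taken.

```python
from dataclasses import dataclass, field
from datetime import timedelta
from typing import Dict, Optional


@dataclass
class Sanction:
    kind: str                      # "warning", "suspension", or "ban"
    duration: Optional[timedelta]  # set only for suspensions
    guideline: str                 # the rule cited to the user


@dataclass
class EnforcementLedger:
    # user_id -> number of recorded non-severe violations
    strikes: Dict[str, int] = field(default_factory=dict)

    def sanction(self, user_id: str, guideline: str, severe: bool = False) -> Sanction:
        """Return the next sanction for a user, escalating with each strike."""
        if severe:
            # Severe violations skip the ladder entirely.
            return Sanction("ban", None, guideline)
        count = self.strikes.get(user_id, 0) + 1
        self.strikes[user_id] = count
        if count == 1:
            return Sanction("warning", None, guideline)
        if count == 2:
            return Sanction("suspension", timedelta(days=1), guideline)
        if count == 3:
            return Sanction("suspension", timedelta(days=7), guideline)
        return Sanction("ban", None, guideline)
```

Because the ladder is a single function of the strike count, every moderator who calls it gets the same answer for the same history, which supports the consistency goal described above.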

Promoting Positive Engagement and Community Building

Moderation isn’t just about deleting negative content; it’s also about fostering positive interactions. Encourage and highlight constructive comments, helpful feedback, and respectful discussions. Moderators can actively engage in positive ways, thanking users for helpful contributions, answering questions, and generally setting a welcoming tone. Creating channels for constructive feedback for mod creators can also divert some potentially toxic criticism into more productive avenues.

Regular Review and Adaptation

The online landscape and community dynamics are constantly evolving. Moderation strategies should not be static. Regularly review your guidelines, moderation tools, and team performance. Solicit feedback from both moderators and the wider community to identify pain points and areas for improvement. Adapting to new challenges, such as emerging forms of toxicity or changes in community demographics, ensures your moderation practices remain effective and relevant.

Ultimately, creating a healthy environment on game mod pages requires a thoughtful, multi-pronged approach. By combining clear guidelines, automated assistance, dedicated human oversight, consistent enforcement, and a focus on positive community building, mod platforms can protect their creators, foster innovation, and ensure a welcoming space for all users.