Best Practices for Moderating Toxic Game Mod Communities and User Content

Game modding has changed how many people play, letting players extend gameplay, create new content, and build vibrant communities around the games they love. That freedom also brings a significant challenge: managing toxicity within mod communities and user-generated content. Left unchecked, harassment and hostility drive away creators and users and ultimately harm the game’s ecosystem. Effective moderation is crucial for cultivating a safe, inclusive, and thriving environment.

Establishing Clear, Enforceable Guidelines

The foundation of any successful moderation strategy is a comprehensive set of clear, concise, and easily accessible community guidelines. These rules should explicitly define acceptable and unacceptable behavior, set content standards for the mods themselves (e.g., no hate speech, illegal content, or harassment), and state the consequences for violations. Publishing the guidelines is only the first step: review and update them regularly, and communicate every change to the community.

- Be Specific: Vague rules are open to misinterpretation. Provide examples of prohibited content or behavior.

- Focus on Impact: Frame rules around the impact of actions on other users, rather than just subjective “offense.”

- Accessibility: Make rules easy to find and understand, and offer translations into the main languages your community uses.

Empowering and Training Community Moderators

A dedicated, well-trained team of moderators is the backbone of effective community management. Moderators can be volunteer community members or paid staff, but either way consistent training is essential. They need a deep understanding of the guidelines, access to the right tools, and the authority to act decisively. Empowering moderators also means supporting them, watching for and preventing burnout, and defining clear escalation paths for complex issues.

- Thorough Vetting: Carefully select moderators based on their judgment, temperament, and understanding of community values.

- Ongoing Training: Educate moderators on emerging forms of toxic behavior, platform updates, and de-escalation techniques.

- Tools and Support: Equip them with efficient reporting systems, moderation dashboards, and a private channel for team communication and support.
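Escalation paths are easiest to follow consistently when they are written down rather than left to each moderator’s judgment. The following is a minimal Python sketch of one way to encode them, assuming a hypothetical three-tier team (volunteer moderators, senior moderators, staff trust-and-safety) and a hypothetical set of report categories. The names `Tier`, `CATEGORY_MIN_TIER`, `Report`, and `route` are illustrative only and are not part of any real moderation platform.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    """Hypothetical escalation tiers; each team should define its own."""
    VOLUNTEER_MOD = 1
    SENIOR_MOD = 2
    STAFF_TRUST_AND_SAFETY = 3


# Hypothetical mapping from report category to the lowest tier allowed to handle it.
CATEGORY_MIN_TIER = {
    "spam": Tier.VOLUNTEER_MOD,
    "off_topic": Tier.VOLUNTEER_MOD,
    "harassment": Tier.SENIOR_MOD,
    "hate_speech": Tier.SENIOR_MOD,
    "illegal_content": Tier.STAFF_TRUST_AND_SAFETY,
    "credible_threat": Tier.STAFF_TRUST_AND_SAFETY,
}


@dataclass
class Report:
    category: str
    repeat_offender: bool = False
    disputed_by_mods: bool = False  # moderators disagree on the right call


def route(report: Report) -> Tier:
    """Return the tier that should handle a report.

    Unknown categories go to senior moderators instead of being dropped,
    and repeat offenders or disputed cases move up one tier (capped at staff).
    """
    tier = CATEGORY_MIN_TIER.get(report.category, Tier.SENIOR_MOD)
    if report.repeat_offender or report.disputed_by_mods:
        tier = Tier(min(tier.value + 1, Tier.STAFF_TRUST_AND_SAFETY.value))
    return tier
```

Sending unrecognized categories to senior moderators is a deliberate choice in this sketch, so that reports which fit no existing category still reach an experienced reviewer.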

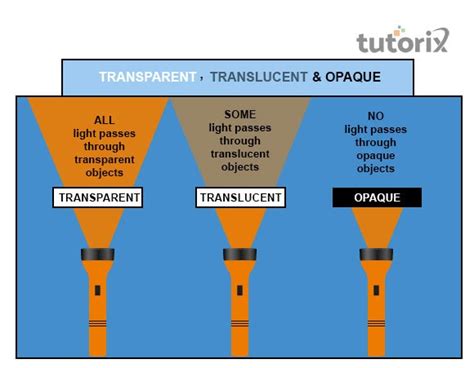

Leveraging Technology for Scale and Efficiency

Manual moderation alone cannot keep pace with large, active communities. Integrating technological solutions is essential for identifying, triaging, and acting on problematic content and behavior at scale. This includes automated filters, AI-powered content analysis, and robust user reporting systems.

- Automated Filters: Implement keyword filters, image recognition, and spam detection to catch obvious violations before they impact users.

- Robust Reporting Systems: Make it easy for users to report issues and to include detailed context with their reports. Ensure reports are reviewed promptly.

- AI-Assisted Moderation: Use machine learning to flag potentially harmful content or behavior for human review, increasing efficiency and consistency.
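To make the division of labor between filters, machine learning, and human reviewers concrete, here is a minimal Python sketch of a first-pass triage step that combines a keyword filter with a model score and feeds a prioritized review queue. It assumes a classifier (not shown) that returns a harm score between 0 and 1; the blocklist terms, the thresholds, the report weighting, and all function and class names are hypothetical placeholders that a real community would tune.

```python
import heapq
import re
from dataclasses import dataclass, field

# Hypothetical placeholder terms; a real blocklist is larger, localized, and maintained.
BLOCKED_TERMS = ["blockedterm", "anotherterm"]

# \b word boundaries stop innocent words that merely contain a term from matching.
BLOCKLIST = re.compile(
    r"\b(?:" + "|".join(map(re.escape, BLOCKED_TERMS)) + r")\b", re.IGNORECASE
)

# Hypothetical thresholds for a classifier that returns a harm score in [0, 1].
HIDE_THRESHOLD = 0.95
REVIEW_THRESHOLD = 0.60


def keyword_hit(text: str) -> bool:
    return BLOCKLIST.search(text) is not None


def triage(text: str, model_score: float) -> str:
    """First-pass decision made before any human looks at the content."""
    if keyword_hit(text) or model_score >= HIDE_THRESHOLD:
        return "hide_pending_review"  # likely violation: hidden, but a human still confirms
    if model_score >= REVIEW_THRESHOLD:
        return "flag_for_review"  # uncertain: stays visible, queued for a moderator
    return "allow"


@dataclass(order=True)
class QueueItem:
    sort_key: float  # negated priority, because heapq is a min-heap
    content_id: str = field(compare=False)


class ReviewQueue:
    """Hands moderators the most urgent items first."""

    def __init__(self) -> None:
        self._heap: list[QueueItem] = []

    def push(self, content_id: str, model_score: float, report_count: int) -> None:
        # Hypothetical weighting: each user report adds 0.1 to the model score.
        priority = model_score + 0.1 * report_count
        heapq.heappush(self._heap, QueueItem(-priority, content_id))

    def pop(self) -> str:
        return heapq.heappop(self._heap).content_id

    def __len__(self) -> int:
        return len(self._heap)
```

Even obvious hits are hidden pending review rather than deleted in this sketch, because keyword filters and classifiers both produce false positives; user reports raise an item’s place in the queue so that content many people flag reaches a moderator sooner.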

Transparent Enforcement and Communication

Consistency and transparency in moderation build trust within the community. When action is taken, especially a warning, suspension, or ban, the affected user should understand why. Clear communication about policy changes, moderation decisions (without publicly exposing the individuals involved), and the overall moderation philosophy helps users understand expectations and fosters a sense of fairness.

- Consistent Application: Apply rules fairly and consistently across all users and content.

- Feedback Loops: Provide channels for users to appeal decisions and offer feedback on moderation processes.

- Public Communication: Regularly update the community on moderation efforts, significant policy changes, and safety initiatives.
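Consistent application is easier to guarantee when the sanction for a violation is computed from written rules instead of being chosen case by case. Below is a minimal Python sketch of a graduated sanction ladder that also generates the explanation sent to the user. The ladder steps, suspension lengths, rule IDs, and the reference to an appeals form are hypothetical examples, not a recommended policy.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical sanction ladder: (action, suspension length in days or None).
LADDER = [
    ("warning", None),
    ("suspension", 3),
    ("suspension", 30),
    ("permanent_ban", None),
]


@dataclass
class Decision:
    action: str
    days: Optional[int]
    notice: str  # explanation sent to the affected user


def decide(rule_id: str, prior_strikes: int, severe: bool = False) -> Decision:
    """Choose a sanction from the strike count and severity only.

    The user's identity is not an input, so identical violations by users
    with the same history always produce identical outcomes.
    """
    if severe:
        step = len(LADDER) - 1  # severe violations go straight to the top of the ladder
    else:
        step = min(max(prior_strikes, 0), len(LADDER) - 1)
    action, days = LADDER[step]
    duration = f" for {days} days" if days else ""
    notice = (
        f"Action: {action.replace('_', ' ')}{duration}. "
        f"Reason: violation of rule {rule_id}. "
        "You can appeal this decision through the appeals form."
    )
    return Decision(action, days, notice)
```

For example, `decide("R3", prior_strikes=1)` returns a 3-day suspension whose notice cites rule R3 and points the user to the appeal channel, which covers both the consistency and the feedback-loop points above.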

Fostering a Culture of Positive Engagement

Moderation isn’t just about punishment; it’s also about promoting and rewarding positive behavior. Creating avenues for positive interaction, highlighting exemplary mod creators, and encouraging constructive feedback can significantly shift the community’s overall tone. Gamification of positive contributions or community recognition programs can incentivize good digital citizenship.

- Highlight Positive Contributions: Feature well-behaved users and high-quality, guideline-compliant mods.

- Community Events: Organize events that encourage collaborative and constructive engagement.

- Educational Resources: Provide resources on best practices for online etiquette and safe content creation.

Conclusion

Moderating toxic game mod communities and user content requires a multi-faceted and ongoing commitment. By establishing clear guidelines, empowering skilled moderators, leveraging technology, practicing transparent enforcement, and actively fostering positive engagement, platforms can create resilient and welcoming environments. These practices help the creativity and collaborative spirit of game modding thrive without being overshadowed by negativity, benefiting both creators and players.