What are effective strategies for gaming mods to combat toxicity and foster positive interaction?

The Critical Role of Gaming Moderators in Community Health

In the expansive world of online gaming, moderators are the unsung heroes who maintain order and ensure a fair, enjoyable experience for all players. Their role extends well beyond enforcing rules: it includes actively combating toxicity and cultivating environments where positive interaction can flourish. Harassment, hate speech, and disruptive behavior can quickly sour a gaming community, driving away players and damaging a game’s reputation. Understanding and implementing effective strategies is therefore essential for any moderation team dedicated to the well-being of its player base.

Addressing toxicity isn’t a simple task; it requires a multi-faceted approach that combines vigilance, empathy, and strategic action. Moderators are often on the front lines, witnessing the best and worst of online human behavior. Their ability to de-escalate conflicts, enforce consistent standards, and inspire positive engagement is central to transforming potentially hostile spaces into thriving communities where players feel safe, respected, and eager to participate.

Proactive Moderation and Clear, Consistent Guidelines

One of the most effective strategies for combating toxicity begins with proactive moderation. This involves not just reacting to incidents but actively monitoring chat logs, forums, and in-game interactions to identify potential issues before they escalate. Early intervention can prevent minor disagreements from spiraling into full-blown flame wars or targeted harassment. Establishing a visible moderator presence demonstrates to the community that behavior is being monitored and that disruptive actions will not go unnoticed.

Complementing proactive monitoring are clear, concise, and consistently enforced community guidelines. A well-articulated code of conduct leaves no room for ambiguity regarding acceptable and unacceptable behavior. These guidelines should be easily accessible to all players and cover a range of issues, from respectful communication to zero tolerance for hate speech, harassment, and discrimination. Crucially, moderators must apply these rules consistently across all users, regardless of their status or influence within the community. Inconsistency erodes trust and can breed resentment, making the moderation effort seem arbitrary or unfair.
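One way to make consistency concrete is to write the sanction rules down as data, so that the outcome depends only on the offense and the player's history, never on who the player is. The following is a minimal sketch under stated assumptions: the ladder steps, the category names, and the choice to skip the warning for zero-tolerance offenses are all hypothetical and would need to match a community's own code of conduct.

```python
# Hypothetical escalation ladder; the steps and durations are assumptions.
ESCALATION_LADDER = ["warning", "24h mute", "7d suspension", "permanent ban"]

# Hypothetical zero-tolerance categories that skip the early steps.
ZERO_TOLERANCE = {"hate_speech", "harassment"}
ZERO_TOLERANCE_OFFSET = 2  # assumed: start at "7d suspension"


def next_sanction(prior_offenses: int, category: str) -> str:
    """Return the sanction for a new offense.

    The result depends only on the offense category and the number of
    prior offenses, so every player is treated the same way.
    """
    if prior_offenses < 0:
        raise ValueError("prior_offenses must be non-negative")
    offset = ZERO_TOLERANCE_OFFSET if category in ZERO_TOLERANCE else 0
    # Clamp to the last step so repeat offenders stay at the final sanction.
    step = min(prior_offenses + offset, len(ESCALATION_LADDER) - 1)
    return ESCALATION_LADDER[step]


if __name__ == "__main__":
    print(next_sanction(0, "spam"))
    print(next_sanction(0, "hate_speech"))
```

Because the function takes no argument describing the player's status or influence, a popular streamer and a brand-new account receive the same result for the same history.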

Fostering Positive Interaction and Empowering the Community

Combating toxicity isn’t solely about punishment; it’s equally about nurturing positive interactions. Moderators can actively foster a healthier environment by promoting and rewarding good behavior. This could include spotlighting helpful players, organizing community-driven events that encourage teamwork, or creating dedicated channels for positive feedback and support. By recognizing and celebrating constructive contributions, moderators can shift the community’s focus towards cooperation and mutual respect.

Empowering the community to be part of the solution is another powerful strategy. Implementing robust reporting tools that are easy to use and clearly explained can turn every player into an additional set of eyes. However, it’s vital that reports are handled swiftly and transparently, with feedback provided to reporters where appropriate (without revealing personal details) to show that their contributions are valued. Furthermore, identifying positive community leaders or long-standing, respected players and elevating them to ambassadorial roles can create a ripple effect of good behavior, as these individuals set examples and help guide newcomers.

Leveraging Technology and Data for Enhanced Moderation

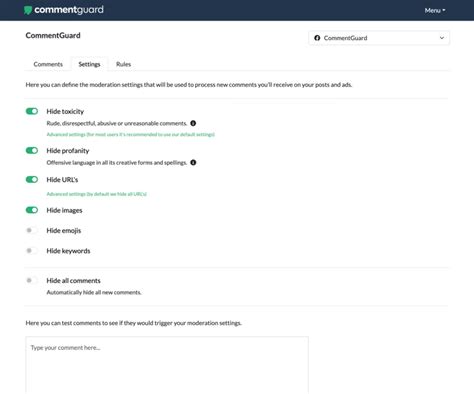

In large or rapidly growing communities, manual moderation alone can be overwhelming, so leveraging technology and data analysis becomes essential. Automated moderation tools, often powered by AI and machine learning, can filter out common offensive language and spam, or even detect behavioral patterns indicative of toxicity before a human moderator intervenes. While AI should never fully replace human judgment, it can significantly reduce the workload, allowing human moderators to focus on more complex cases that require nuanced understanding.
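To illustrate the division of labor between automation and human judgment, here is a minimal sketch of a rule-based chat filter. It is not a machine-learning system and not any particular product's API: the blocked terms, the spam threshold, and the names `screen_message` and `Verdict` are all hypothetical. Messages containing a blocked term are routed to a human for review rather than punished automatically, while obvious repeated spam is simply hidden.

```python
import re
from dataclasses import dataclass

# Hypothetical placeholder terms; a real community maintains its own list.
BLOCKED_TERMS = {"slur1", "slur2"}
# Assumed threshold: the same message seen this many times counts as spam.
SPAM_REPEAT_THRESHOLD = 3


@dataclass
class Verdict:
    action: str  # "allow", "hide", or "review"
    reason: str


def _normalize(message: str) -> str:
    """Lowercase the message and collapse runs of whitespace."""
    return re.sub(r"\s+", " ", message.strip().lower())


def screen_message(message: str, recent_messages: list[str]) -> Verdict:
    """Screen one chat message against the sender's recent messages."""
    text = _normalize(message)
    words = set(re.findall(r"[a-z0-9']+", text))
    if words & BLOCKED_TERMS:
        # Context matters, so a human moderator makes the final call.
        return Verdict("review", "blocked term")
    repeats = sum(1 for m in recent_messages if _normalize(m) == text)
    if repeats + 1 >= SPAM_REPEAT_THRESHOLD:
        return Verdict("hide", "repeated message")
    return Verdict("allow", "")


if __name__ == "__main__":
    print(screen_message("good game everyone", []))
    print(screen_message("buy gold", ["buy gold", "Buy  Gold"]))
```

Exact word matching like this is easy to evade with misspellings and says nothing about tone or context, which is one reason the flagged cases go to a person instead of triggering a sanction directly.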

Beyond automated filters, collecting and analyzing data on reported incidents, types of toxicity, peak times, and recurring offenders can provide invaluable insights. This data can inform policy adjustments, identify specific areas of concern within the game or community, and help allocate moderation resources more effectively. Understanding trends allows for a more strategic and adaptive approach to combating evolving forms of toxicity, ensuring that moderation efforts remain relevant and potent.
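As a rough sketch of what such an analysis could look like, the snippet below summarizes a list of reports by category, peak hour, and recurring offenders. The report fields (`timestamp`, `category`, `reported_player`), the sample data, and the repeat threshold are assumptions made for illustration; a real reporting system will have its own schema.

```python
from collections import Counter
from datetime import datetime

# Hypothetical sample reports; field names are assumptions for this sketch.
SAMPLE_REPORTS = [
    {"timestamp": "2024-01-05T21:15:00", "category": "harassment", "reported_player": "player_a"},
    {"timestamp": "2024-01-05T21:40:00", "category": "spam", "reported_player": "player_b"},
    {"timestamp": "2024-01-06T21:05:00", "category": "harassment", "reported_player": "player_a"},
    {"timestamp": "2024-01-06T14:30:00", "category": "hate_speech", "reported_player": "player_c"},
]


def summarize(reports, repeat_threshold=2):
    """Summarize reports by toxicity type, peak hour, and repeat offenders."""
    by_category = Counter(r["category"] for r in reports)
    by_hour = Counter(datetime.fromisoformat(r["timestamp"]).hour for r in reports)
    by_player = Counter(r["reported_player"] for r in reports)
    # Players reported at least `repeat_threshold` times, in sorted order.
    recurring = sorted(p for p, n in by_player.items() if n >= repeat_threshold)
    return {
        "by_category": by_category,
        "peak_hour": by_hour.most_common(1)[0][0] if by_hour else None,
        "recurring_offenders": recurring,
    }


if __name__ == "__main__":
    print(summarize(SAMPLE_REPORTS))
```

A summary like this only counts reports, not confirmed violations, so it is better suited to deciding where moderators should look and when to schedule coverage than to sanctioning anyone directly.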

Leading by Example and Continuous Learning

Ultimately, the effectiveness of any moderation strategy hinges on the moderators themselves. They must lead by example, maintaining professionalism, impartiality, and empathy in all interactions. A moderator’s calm and respectful demeanor, even when dealing with highly agitated or offensive players, sets a standard for the entire community. Training and ongoing support for moderation teams are critical; this includes not only understanding the rules but also developing strong communication skills, conflict resolution techniques, and an awareness of self-care to prevent burnout.

The online landscape is constantly evolving, and so too are the forms and methods of toxicity. Therefore, continuous learning and adaptability are key. Moderation teams should regularly review their strategies, policies, and tools, staying informed about new threats and best practices in community management. Engaging in open dialogue with the community (where appropriate) about moderation challenges and changes can also foster a sense of shared responsibility and collaboration in maintaining a healthy environment.

Building a Better Gaming Ecosystem Through Thoughtful Moderation

Combating toxicity and fostering positive interaction in gaming communities is an ongoing, dynamic process that requires dedication, strategy, and a collaborative spirit. By adopting proactive measures, establishing clear and consistent guidelines, empowering positive community members, leveraging technological tools, and upholding strong leadership, gaming moderators can transform their communities. These efforts do more than just enforce rules; they cultivate a culture of respect, enjoyment, and belonging that enriches the gaming experience for everyone.

A thriving, positive gaming community is a testament to the hard work of its moderation team. It creates a space where players can truly connect, compete, and collaborate without fear of harassment, ultimately leading to a more vibrant and sustainable gaming ecosystem for developers and players alike.