Gaming mods: How to tackle toxicity & misinformation without stifling community engagement?

Gaming communities thrive on interaction, shared experiences, and user-generated content, much of it created and delivered through mods. That openness fosters creativity and engagement, but it also makes it easier for toxicity and misinformation to spread. For community managers and platform owners, the challenge is to address these problems effectively without stifling the free-flowing communication that makes these communities so compelling.

The Dual Nature of Open Gaming Platforms

The ability for players to create, share, and modify game content has revolutionized the gaming landscape, leading to richer experiences and stronger communities. From elaborate cosmetic overhauls to entirely new game mechanics, mods embody the collective passion and ingenuity of players. Yet, this decentralized creativity also means less direct control, making platforms susceptible to harmful content, abusive language, and the spread of false narratives that can erode trust and drive players away.

Identifying and Understanding the Threats

Toxicity in gaming ranges from low-level harassment and cyberbullying to hate speech, doxxing, and targeted abuse. It creates an unwelcoming environment that can deter new players and alienate existing ones. Misinformation can take the form of false claims about game mechanics, cheat methods, or security vulnerabilities, or of broader societal narratives amplified within gaming spaces. Both can damage community health, reduce player retention, and create reputational risks for game developers and publishers.

Strategic Approaches to Moderation

1. Clear and Enforceable Guidelines

The foundation of any healthy community is a set of transparent, easily accessible, and consistently enforced rules. These guidelines should explicitly define what constitutes unacceptable behavior and misinformation, covering various aspects from language to content sharing. Regular communication about these rules and the consequences of breaking them is crucial.

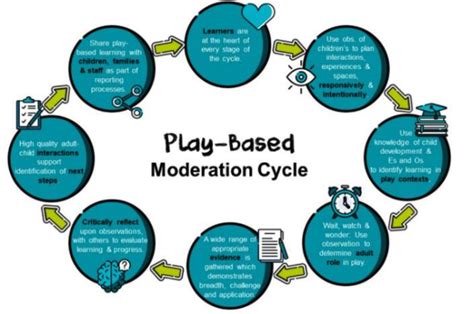

2. Leveraging Technology and Human Oversight

Effective moderation often requires a blend of automated tools and human intervention. AI-powered filters can flag suspicious content or language for review, helping to manage scale. However, human moderators provide the nuanced understanding and contextual judgment necessary to differentiate between genuine toxicity and innocent banter, ensuring fair and accurate decisions.
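The split between automated flagging and human judgment can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production design: the keyword patterns are hypothetical stand-ins for an AI classifier, and the `decide` callback stands in for a human moderator. The key point is that the filter never deletes anything; it only holds content for review.

```python
import re
from collections import deque
from dataclasses import dataclass
from typing import Callable, Deque, List, Tuple


@dataclass
class Message:
    author: str
    text: str


# Hypothetical placeholder patterns standing in for an AI classifier's output:
# one targets insults, the other a common scam/misinformation lure.
FLAG_PATTERNS = [
    re.compile(r"\bidiot\b", re.IGNORECASE),
    re.compile(r"free\s+coins?\s+generator", re.IGNORECASE),
]


def auto_flag(text: str) -> bool:
    """Return True if any pattern matches. Flagging only routes to review."""
    return any(p.search(text) for p in FLAG_PATTERNS)


class ModerationPipeline:
    def __init__(self) -> None:
        self.published: List[Message] = []
        self.review_queue: Deque[Message] = deque()

    def submit(self, msg: Message) -> str:
        """Publish clean messages immediately; hold flagged ones for a human."""
        if auto_flag(msg.text):
            self.review_queue.append(msg)
            return "held_for_review"
        self.published.append(msg)
        return "published"

    def review(self, decide: Callable[[Message], bool]) -> List[Tuple[Message, bool]]:
        """Drain the queue in arrival order; `decide` models a human moderator."""
        outcomes = []
        while self.review_queue:
            msg = self.review_queue.popleft()
            approved = decide(msg)
            if approved:
                self.published.append(msg)
            outcomes.append((msg, approved))
        return outcomes
```

In use, a message such as "gg, you lucky idiot, that shot was insane" would be held by the filter, and a human reviewer who recognises it as friendly banter could approve it, while a "free coins generator" link would stay unpublished. Keeping the final decision with a person is what lets the system scale without punishing innocent banter.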

3. Empowering the Community

Players themselves can be a powerful force in moderation. Implementing robust reporting tools and encouraging users to flag inappropriate content allows communities to self-police effectively. Rewarding positive contributions and fostering a culture where helpfulness and respect are celebrated can also organically reduce negative behaviors.
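One way to make player reporting robust is to escalate content only once several different players have flagged it, and to credit reporters whose flags moderators uphold. The Python sketch below assumes a hypothetical `ReportTracker` class and a default threshold of three distinct reporters; both are illustrative choices, not recommendations from any particular platform.

```python
from collections import defaultdict
from typing import Dict, List, Set


class ReportTracker:
    """Collects player reports and escalates content once enough distinct players agree.

    The default threshold of 3 distinct reporters is a hypothetical value.
    """

    def __init__(self, threshold: int = 3) -> None:
        self.threshold = threshold
        self.reporters: Dict[str, Set[str]] = defaultdict(set)
        self.escalated: List[str] = []  # content IDs sent to moderators, in order
        self.confirmed_reports: Dict[str, int] = defaultdict(int)

    def report(self, content_id: str, reporter_id: str) -> bool:
        """Record a report; return True only at the moment the item is escalated."""
        # A set ignores repeat reports from the same player, so one user
        # cannot push content to moderators by spamming the report button.
        self.reporters[content_id].add(reporter_id)
        if content_id in self.escalated:
            return False  # already with moderators
        if len(self.reporters[content_id]) >= self.threshold:
            self.escalated.append(content_id)
            return True
        return False

    def confirm(self, content_id: str) -> None:
        """Called after a moderator upholds a report; credits every player who flagged it."""
        for reporter in self.reporters.get(content_id, set()):
            self.confirmed_reports[reporter] += 1
```

Escalation sends content to human moderators rather than removing it automatically, which limits the damage a coordinated group of false reporters can do. The `confirmed_reports` counter is one possible hook for rewarding players whose reports prove helpful.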

4. Education and Critical Thinking

Instead of just reacting to misinformation, platforms can proactively educate players on how to critically evaluate information, identify reliable sources, and understand the potential impact of spreading unverified content. This fosters a more discerning community less prone to falling for or spreading false narratives.

The Delicate Balance: Engagement vs. Control

The core challenge is to apply these moderation strategies without creating a sterile, over-regulated environment that discourages genuine interaction and creativity. Over-moderation can lead to a chilling effect, where players become hesitant to express themselves for fear of arbitrary punishment. The goal is not to eliminate all conflict or dissent, but to channel it constructively and prevent it from devolving into abuse or harmful misinformation.

The Role of Mods in a Healthy Ecosystem

Mods themselves can also be part of the solution. Modding communities often develop their own self-regulatory mechanisms. Furthermore, official modding platforms can implement specific policies for user-generated content, requiring creators to adhere to community standards. Encouraging mods that enhance positive interaction, provide clearer information, or improve reporting tools can further contribute to a safer environment.

Conclusion

Tackling toxicity and misinformation in gaming communities is an ongoing, multifaceted endeavor. It requires a commitment to clear rules, a blend of technological and human moderation, and crucially, the active participation and education of the community itself. By carefully navigating this complex landscape, platforms can maintain the vibrant, engaging spaces that gamers love, ensuring that creativity and connection flourish without being overshadowed by negativity.