How can game communities effectively moderate mod users & prevent toxicity?

The Dual Nature of Modding: Creativity and Challenge

Modding has long been a vibrant cornerstone of many gaming communities, extending the life of games, fostering creativity, and providing players with unique experiences. From minor graphical tweaks to expansive new storylines, user-generated content (UGC) enriches the gaming landscape immensely. However, this freedom also brings significant challenges. Unmoderated or poorly moderated modding can introduce game-breaking bugs, security vulnerabilities, and inappropriate content and, crucially, can foster environments rife with toxicity and harassment among users.

For game communities to truly thrive with mod integration, effective moderation of mod users is not just beneficial; it’s essential. It requires a delicate balance of empowering creators while safeguarding the community’s well-being and the game’s integrity. The goal is to cultivate a space where creativity flourishes responsibly, and all participants feel respected and secure.

Establishing a Strong Foundation: Clear Guidelines and Expectations

The first step towards effective moderation is the establishment of comprehensive, clear, and easily accessible guidelines. A well-defined Code of Conduct (CoC) should explicitly outline acceptable behavior for mod creators and users alike. This includes rules regarding content appropriateness (e.g., no hate speech, pornography, or illegal content), technical standards (e.g., no malware, exploits, or severe instability), and community interactions (e.g., no harassment, bullying, or doxxing).

These guidelines should be prominent on all platforms where mods are shared or discussed, such as official forums, Discord servers, and mod repositories. Regular communication about these rules, perhaps through blog posts or announcements, ensures that the community is consistently aware of what is expected of them. Ambiguity breeds confusion, which in turn can lead to unintended infractions or, worse, deliberate exploitation of loopholes.

Empowering the Community: Active Moderation and Reporting Systems

While clear rules form the backbone, their enforcement relies heavily on active moderation. Community-driven moderation, often involving dedicated volunteers, can be incredibly effective. These moderators, typically passionate members of the community themselves, can monitor discussions, review reported content, and act as a first line of defense against toxicity. Providing them with proper training, tools, and support is crucial to ensure consistency and prevent burnout.

Robust Reporting Mechanisms

An intuitive and efficient reporting system is equally vital. Users should be able to easily report problematic mods or toxic behavior without fear of reprisal. This system needs to be transparent, allowing users to understand that their reports are being reviewed and acted upon, even if the specific outcome isn’t always shared for privacy reasons. Prompt investigation and consistent action on reports build trust within the community and discourage bad actors.
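To make the transparency point concrete, here is a minimal sketch of a report queue in Python. It is an illustration under stated assumptions, not a description of any particular platform: the names `ReportQueue`, `Report`, `Status`, and `public_status` are all hypothetical. The idea it shows is that the reporter can always see how far their report has progressed, while the reporter's identity and the moderators' resolution notes stay internal.

```python
from dataclasses import dataclass
from enum import Enum
from itertools import count


class Status(Enum):
    RECEIVED = "received"
    UNDER_REVIEW = "under review"
    CLOSED = "closed"


@dataclass
class Report:
    ticket_id: int
    target: str            # the mod or user being reported
    reason: str
    reporter: str          # visible to moderators only
    status: Status = Status.RECEIVED
    resolution: str = ""   # internal moderator note, never shown publicly


class ReportQueue:
    """Hypothetical in-memory queue; a real system would persist reports."""

    def __init__(self):
        self._reports = {}
        self._ids = count(1)

    def submit(self, reporter, target, reason):
        """Store a new report and return a ticket ID the reporter can track."""
        ticket_id = next(self._ids)
        self._reports[ticket_id] = Report(ticket_id, target, reason, reporter)
        return ticket_id

    def start_review(self, ticket_id):
        self._reports[ticket_id].status = Status.UNDER_REVIEW

    def close(self, ticket_id, resolution):
        report = self._reports[ticket_id]
        report.status = Status.CLOSED
        report.resolution = resolution

    def public_status(self, ticket_id):
        """What the reporter sees: progress only, not the private outcome."""
        return self._reports[ticket_id].status.value
```

A reporter who calls `submit(...)` gets back a ticket ID and can later pass it to `public_status(...)` to see whether the report is received, under review, or closed. Keeping the resolution out of that view is one simple way to balance visible follow-through with privacy.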

Technical Safeguards and Developer Involvement

Beyond human moderation, technical solutions play a critical role, especially concerning the mods themselves. Developers of games with robust modding scenes can implement several features:

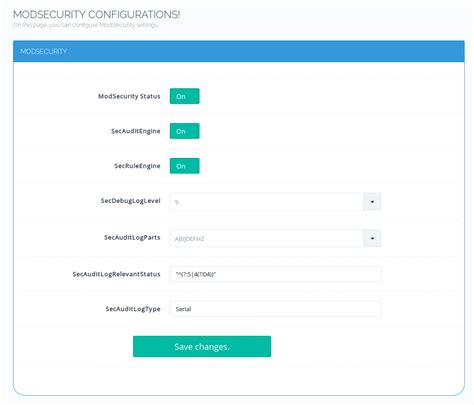

- Automated Scanning: Employing tools to scan uploaded mods for known malware, viruses, or exploits before they are made public.

- Mod Sandboxing: Running mods in isolated environments to limit their potential impact on the base game or user’s system.

- API Restrictions: Limiting the capabilities of mod APIs to prevent malicious code from accessing sensitive system information or performing unintended actions.

- Version Control & Compatibility Checks: Ensuring mods are compatible with current game versions and flagging those that might cause instability.
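As a rough illustration of how three of these safeguards might fit together at upload time, the Python sketch below checks a mod file against a blocklist of known-bad hashes, compares its requested permissions to an allowlist, and verifies its declared game-version range. Everything specific here is an assumption made for the example: the manifest keys (`min_game_version`, `max_game_version`, `permissions`), the permission names, and the blocklist contents are hypothetical. Hash matching also only catches files that are already known to be malicious, so it would complement rather than replace a real malware scanner.

```python
import hashlib

# Hypothetical blocklist of SHA-256 digests for files already known to be malicious.
KNOWN_BAD_SHA256 = {hashlib.sha256(b"malicious payload").hexdigest()}

# Hypothetical permissions a mod API might agree to grant.
ALLOWED_PERMISSIONS = {"read_game_state", "draw_ui", "play_sound"}


def parse_version(text):
    """Turn '1.10.0' into (1, 10, 0) so versions compare numerically."""
    return tuple(int(part) for part in text.split("."))


def review_upload(data, manifest, game_version):
    """Return a list of human-readable flags; an empty list means no flags."""
    flags = []

    # Automated scanning: exact-match lookup against known-bad hashes.
    if hashlib.sha256(data).hexdigest() in KNOWN_BAD_SHA256:
        flags.append("matches known malware hash")

    # API restrictions: flag any requested permission outside the allowlist.
    extra = sorted(set(manifest.get("permissions", [])) - ALLOWED_PERMISSIONS)
    if extra:
        flags.append("requests disallowed permissions: " + ", ".join(extra))

    # Compatibility check: the game version must fall in the declared range.
    low = parse_version(manifest["min_game_version"])
    high = parse_version(manifest["max_game_version"])
    if not low <= parse_version(game_version) <= high:
        flags.append("not declared compatible with game version " + game_version)

    return flags


if __name__ == "__main__":
    manifest = {
        "min_game_version": "1.2.0",
        "max_game_version": "1.99.0",
        "permissions": ["draw_ui", "read_files"],
    }
    print(review_upload(b"harmless textures", manifest, "1.10.0"))
```

Returning a list of flags instead of a single pass/fail value lets a human moderator see every reason a mod was held back. Sandboxing is not shown because it depends on how the game engine loads and runs mod code.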

Close collaboration between game developers and leading modders can also foster a healthier ecosystem. Developers can provide official tools, documentation, and even support channels for modders, which helps standardize mod quality and adherence to safety protocols.

Fostering a Culture of Respect and Positive Reinforcement

Ultimately, preventing toxicity isn’t just about punishment; it’s about cultivating a positive culture. This involves actively promoting and celebrating good behavior and high-quality, non-toxic mods. Showcasing exemplary mod creators and their work can inspire others to contribute positively. Regular community events, challenges, or even developer spotlights on user creations can reinforce a sense of shared purpose and pride.

Educational initiatives, such as tutorials on safe modding practices or discussions on the impact of online behavior, can empower users to be part of the solution. Leaders within the community, including moderators, developers, and prominent content creators, should consistently model respectful and inclusive behavior, setting the tone for interactions.

Conclusion: An Ongoing Commitment to a Thriving Community

Moderating mod users and preventing toxicity is not a one-time fix but an ongoing commitment. It requires a dynamic strategy that combines clear community guidelines, active human moderation, robust technical safeguards, and a proactive approach to fostering a positive culture. By consistently adapting to new challenges, empowering both moderators and the general community, and celebrating the incredible creativity that mods bring, game communities can ensure that their modding scene remains a vibrant, welcoming, and toxicity-free space for everyone.