How do gaming communities effectively moderate toxic users in modding forums?

The Challenge of Toxicity in Modding Forums

Modding forums are vibrant hubs of creativity and collaboration, essential to extending the life and enjoyment of countless video games. They connect talented mod creators with eager players, fostering innovation and community spirit. However, like any online space, modding forums are not immune to toxicity. The passionate nature of gaming, coupled with the technical challenges and subjective opinions surrounding user-generated content, can sometimes lead to heated discussions, personal attacks, and unconstructive criticism that threaten to derail the positive environment.

Effectively moderating these spaces is crucial not just for maintaining order, but for ensuring that modders feel safe to share their work and users feel welcome to engage constructively. The challenge lies in balancing freedom of expression with the need for a respectful, inclusive atmosphere. This article explores key strategies gaming communities employ to tackle toxicity in their modding forums.

Establishing Clear Community Guidelines and Expectations

The foundation of any effective moderation strategy is a robust set of community guidelines. These rules should be clearly articulated, easily accessible, and frequently referenced. They define what constitutes acceptable and unacceptable behavior, covering aspects like:

- Respectful Communication: Prohibiting personal attacks, hate speech, harassment, and discriminatory language.

- Constructive Criticism: Encouraging feedback that is helpful and specific, rather than solely negative or derisive.

- Intellectual Property: Guidelines around using assets, crediting original creators, and avoiding plagiarism.

- Spam and Advertising: Rules against unsolicited promotions or repetitive posting.

- Reporting Misconduct: Instructions on how users can report violations.

Transparency in these rules, alongside clear consequences for infractions, empowers users to self-regulate and assists moderators in making consistent, fair decisions.

Empowering and Equipping Moderators

Moderators are the frontline defense against toxicity. Whether volunteers or paid staff, they need to be well-trained and adequately supported. Effective moderator empowerment involves:

- Comprehensive Training: Covering conflict resolution techniques, consistent application of guidelines, de-escalation tactics, and understanding the nuances of forum dynamics.

- Robust Toolsets: Providing moderators with efficient tools for reviewing reports, issuing warnings and temporary or permanent bans, and managing content, along with private channels where moderators can discuss cases and reach decisions together.

- Support Systems: Regular check-ins, mental health resources, and a clear escalation path for complex issues help prevent moderator burnout. Recognition for their often-thankless work is also vital.

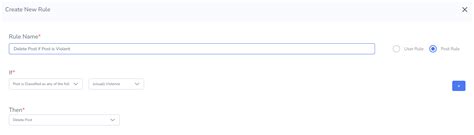

Fostering a Proactive Reporting Culture

Users are often the first to encounter toxic behavior, making an efficient reporting system paramount. Communities should:

- Simplify the Reporting Process: Make it easy and intuitive for users to flag problematic posts, comments, or users directly within the forum interface.

- Ensure Anonymity (where appropriate): Allowing users to report anonymously can encourage more reports by removing the fear of retaliation.

- Provide Feedback: Even when specifics cannot be disclosed, letting users know that their reports have been received and acted upon (e.g., via a notification or a change in post status) builds trust in the moderation system.

- Educate Users: Teach the community what to report and how to do it effectively, emphasizing quality over quantity in reports.
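The reporting practices above can be sketched as a small report queue. This is a minimal illustration, assuming the forum stores the reporter's identity server-side only so it can send feedback, and never includes it in the view that the reported user or other members see (anonymity toward other users, not toward the system). `ReportQueue`, its methods, and the status names are hypothetical, and notifications are collected in a list instead of being delivered through a real messaging system.

```python
from dataclasses import dataclass
from enum import Enum
from itertools import count


class Status(Enum):
    RECEIVED = "received"
    ACTIONED = "actioned"
    DISMISSED = "dismissed"


@dataclass
class Report:
    report_id: int
    post_id: int
    reason: str
    reporter_id: str  # kept server-side only, used for feedback notifications
    status: Status = Status.RECEIVED


class ReportQueue:
    """Hypothetical queue: reporters are hidden from other users but still get feedback."""

    def __init__(self) -> None:
        self._reports: dict[int, Report] = {}
        self._ids = count(1)
        # Stand-in for a real notification system: (user_id, message) pairs.
        self.notifications: list[tuple[str, str]] = []

    def submit(self, reporter_id: str, post_id: int, reason: str) -> int:
        report = Report(next(self._ids), post_id, reason, reporter_id)
        self._reports[report.report_id] = report
        self._notify(report, "Your report has been received.")
        return report.report_id

    def public_view(self, report_id: int) -> dict:
        """What a non-moderator may see: no reporter identity."""
        r = self._reports[report_id]
        return {"post_id": r.post_id, "reason": r.reason, "status": r.status.value}

    def resolve(self, report_id: int, actioned: bool) -> None:
        r = self._reports[report_id]
        r.status = Status.ACTIONED if actioned else Status.DISMISSED
        # Tell the reporter the outcome without disclosing the specific sanction.
        self._notify(r, f"Your report was reviewed ({r.status.value}).")

    def _notify(self, report: Report, message: str) -> None:
        self.notifications.append((report.reporter_id, message))


if __name__ == "__main__":
    q = ReportQueue()
    rid = q.submit("alice", post_id=42, reason="personal attack")
    q.resolve(rid, actioned=True)
    print(q.public_view(rid))
    print(q.notifications)
```

Separating the stored report from its public view keeps the "who reported" detail out of anything the reported user can see, while the two-step notification covers both "received" and "acted upon" feedback.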

Implementing Escalation and Consequence Systems

A consistent and fair system of consequences is crucial for deterring repeat offenders. This typically involves a tiered approach:

- Warnings: For minor infractions, a private or public warning provides an opportunity for the user to correct their behavior.

- Temporary Bans: For repeated minor offenses or moderate violations, a temporary suspension (e.g., 24 hours to a week) serves as a stronger deterrent.

- Permanent Bans: Reserved for severe violations (e.g., hate speech, severe harassment, illegal content) or for persistent disregard of the rules after multiple warnings and temporary bans.

Many communities also offer an appeal process that lets banned users explain their actions and request reinstatement, which reinforces the sense that sanctions are applied fairly.
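The tiered approach can be expressed as a small decision function, which is one way to keep sanctions consistent between moderators. This is a minimal sketch, assuming the forum tracks each user's prior warnings and temporary bans. The severity levels, the thresholds (two warnings before a temporary ban, two temporary bans before a permanent one), and the ban lengths of 24 hours and one week are illustrative assumptions; `decide_sanction` and `Sanction` are hypothetical names.

```python
from dataclasses import dataclass
from datetime import timedelta
from enum import Enum
from typing import Optional


class Severity(Enum):
    MINOR = 1
    MODERATE = 2
    SEVERE = 3  # e.g. hate speech, severe harassment, illegal content


@dataclass(frozen=True)
class Sanction:
    kind: str                             # "warning", "temporary ban", or "permanent ban"
    duration: Optional[timedelta] = None  # only set for temporary bans


# Assumed thresholds; each community would tune these to its own guidelines.
WARNINGS_BEFORE_TEMP_BAN = 2
TEMP_BANS_BEFORE_PERMANENT = 2
TEMP_BAN_LENGTHS = [timedelta(hours=24), timedelta(days=7)]


def decide_sanction(severity: Severity, prior_warnings: int, prior_temp_bans: int) -> Sanction:
    """Pick the next step on a warning -> temporary ban -> permanent ban ladder."""
    if severity is Severity.SEVERE:
        return Sanction("permanent ban")
    if prior_temp_bans >= TEMP_BANS_BEFORE_PERMANENT:
        # Persistent disregard of the rules after earlier warnings and bans.
        return Sanction("permanent ban")
    if severity is Severity.MODERATE or prior_warnings >= WARNINGS_BEFORE_TEMP_BAN:
        # Each earlier temporary ban lengthens the next one, capped at the last entry.
        index = min(prior_temp_bans, len(TEMP_BAN_LENGTHS) - 1)
        return Sanction("temporary ban", TEMP_BAN_LENGTHS[index])
    return Sanction("warning")
```

In practice a function like this would more likely produce a recommendation that a moderator confirms, and an appeal would be handled by a person rather than by the function. Keeping the thresholds as named constants also makes the policy easy to publish alongside the guidelines.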

Leveraging Technology and Automation

While human moderation is indispensable, technology can significantly aid in scaling efforts and identifying issues quickly:

- Keyword Filters: Automated filters can flag or remove posts containing hate speech, slurs, or spam keywords.

- Spam Detection Algorithms: Tools that identify and automatically remove bot-generated spam.

- AI-Powered Moderation: Machine-learning models can analyze context, sentiment, and user behavior to identify potentially toxic interactions and escalate them for human review.

- Bots for FAQs and Reminders: Automated responses can guide users to guidelines, answer common questions, and provide immediate, polite reminders about forum rules.

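Spam detection does not always require sophisticated tooling. As one simple heuristic (not a bot-detection algorithm in its own right), the sketch below flags a user who posts the same text, ignoring case and extra whitespace, more than `max_repeats` times within a sliding time window. `RepeatPostDetector` and its default thresholds (3 repeats in 10 minutes) are assumptions for illustration.

```python
from collections import defaultdict, deque


class RepeatPostDetector:
    """Hypothetical heuristic: flag a user who posts the same normalized text
    more than `max_repeats` times within `window_seconds`."""

    def __init__(self, max_repeats: int = 3, window_seconds: float = 600.0) -> None:
        self.max_repeats = max_repeats
        self.window = window_seconds
        # Maps (user_id, normalized text) to timestamps of recent identical posts.
        self._history = defaultdict(deque)

    @staticmethod
    def _normalize(text: str) -> str:
        # Collapse case and whitespace so trivial edits do not evade the check.
        return " ".join(text.lower().split())

    def is_spam(self, user_id: str, text: str, timestamp: float) -> bool:
        times = self._history[(user_id, self._normalize(text))]
        # Drop timestamps that have fallen out of the sliding window.
        while times and timestamp - times[0] > self.window:
            times.popleft()
        times.append(timestamp)
        return len(times) > self.max_repeats
```

Because the history is keyed per user, one member repeating a message does not affect anyone else posting similar text, which limits false positives for popular phrases such as a mod's name.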
These tools act as a first line of defense, freeing up human moderators to focus on more nuanced and complex cases.
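To illustrate that division of labor, here is a minimal keyword filter that removes posts containing unambiguous terms and queues context-dependent ones for a moderator. The term lists are placeholders, `screen_post` and `ModerationQueue` are hypothetical names, and letting flagged posts stay visible while they await review is one possible policy, assumed here for simplicity.

```python
import re
from dataclasses import dataclass, field

# Placeholder term lists (hypothetical); real communities maintain their own.
REMOVE_TERMS = ["slur_a", "slur_b"]          # unambiguous: remove automatically
FLAG_TERMS = ["trash", "garbage", "idiot"]   # context-dependent: send to a human


def _compile(terms: list[str]) -> re.Pattern:
    # Word boundaries avoid matching inside longer words (e.g. "trashcan").
    return re.compile(r"\b(?:" + "|".join(map(re.escape, terms)) + r")\b", re.IGNORECASE)


REMOVE_RE = _compile(REMOVE_TERMS)
FLAG_RE = _compile(FLAG_TERMS)


@dataclass
class ModerationQueue:
    pending: list[str] = field(default_factory=list)


def screen_post(text: str, queue: ModerationQueue) -> str:
    """Return 'removed', 'flagged', or 'published' for a new post."""
    if REMOVE_RE.search(text):
        return "removed"
    if FLAG_RE.search(text):
        # Assumed policy: the post stays visible while a moderator reviews it.
        queue.pending.append(text)
        return "flagged"
    return "published"
```

A phrase like "this mod is trash" may be blunt criticism rather than an attack, which is exactly the kind of judgment a keyword list cannot make; routing that tier to a person keeps the filter from over-removing.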

Promoting Positive Engagement and Community Building

Beyond reacting to toxicity, communities can reduce it proactively by fostering a positive culture. Strategies include:

- Highlighting Good Behavior: Recognizing users who contribute positively, offer helpful advice, or adhere to guidelines sets a good example.

- Organizing Community Events: Mod showcases, contests, or Q&A sessions with modders can strengthen bonds and redirect focus to creative output.

- Creating Safe Spaces for Feedback: Dedicated sections for constructive criticism or bug reports can funnel potentially negative feedback into productive channels.

- Mentorship and Peer Support: Encouraging experienced users to help newcomers fosters a supportive environment.

A Continuous Effort for Healthier Modding Spaces

Moderating modding forums is not a one-time task but an ongoing commitment. It requires continuous adaptation to new forms of toxicity, evolving community needs, and technological advancements. By combining clear guidelines, empowered moderators, proactive reporting, consistent consequences, smart technology, and a focus on positive community building, gaming communities can create modding forums that remain vibrant, creative, and welcoming spaces for everyone.