How do gaming moderators effectively combat toxicity without stifling community discussion?

Navigating the Delicate Balance: Moderation and Community Engagement

Online gaming communities are vibrant hubs of interaction, creativity, and shared passion. However, they can also become breeding grounds for toxicity, ranging from minor annoyances to severe harassment. The critical challenge for gaming moderators is to effectively combat this negativity without inadvertently stifling the very discussions that make these communities thrive. It’s a delicate balancing act requiring nuanced strategies, clear communication, and a deep understanding of human behavior.

The Dual Challenge: Eradicating Toxicity vs. Encouraging Discourse

Toxicity manifests in many forms: hate speech, personal attacks, spam, cheating, and disruptive behavior. Left unchecked, it drives away players, damages a game’s reputation, and creates an unwelcoming environment. Conversely, heavy-handed or overly broad moderation can produce a ‘chilling effect,’ in which players hesitate to express themselves and discussions become sterile and unengaging. The goal is not censorship but curation: shaping an environment where constructive interaction is prioritized over destructive behavior.

Strategies for Effective Toxicity Combat

Clear and Accessible Guidelines

The foundation of any successful moderation strategy is a clear, concise, and easily accessible set of community guidelines or rules of conduct. These rules must explicitly define what constitutes unacceptable behavior, often with examples. Transparency around these rules ensures players understand expectations and potential consequences, reducing ambiguity and fostering a sense of fairness.

Proactive and Reactive Moderation

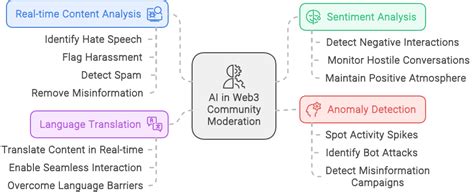

- Proactive Moderation: This involves active monitoring of chat channels, forums, and social platforms by human moderators, sometimes augmented by AI tools that flag potentially problematic language or patterns of behavior. Early intervention can prevent minor disagreements from escalating.

- Reactive Moderation: Robust reporting systems are crucial. Players should have simple, effective ways to report toxic behavior directly to moderators. This empowers the community to participate in maintaining order and provides critical data for moderators to act upon. Tiered penalties, from warnings to temporary bans to permanent exclusions, ensure consequences fit the severity and frequency of the infraction.
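To make the idea of tiered penalties concrete, here is a minimal sketch of an escalation ladder in which both the severity and the frequency of confirmed infractions push a player up the scale. Everything in it is hypothetical and for illustration only: the `Severity` buckets and their weights, the rung names in `LADDER`, and the `PlayerRecord` and `apply_penalty` helpers are assumptions, not a description of any particular game’s system.

```python
from dataclasses import dataclass
from enum import Enum


class Severity(Enum):
    """Hypothetical severity buckets assigned to a confirmed report.

    The value is the number of 'strikes' the infraction adds (assumed weights).
    """
    MINOR = 1   # e.g. spam or mild disruption
    MAJOR = 2   # e.g. personal attacks
    SEVERE = 4  # e.g. hate speech or cheating


# Hypothetical penalty ladder, ordered from lightest to heaviest.
LADDER = ["warning", "temp_ban_24h", "temp_ban_7d", "permanent_ban"]


@dataclass
class PlayerRecord:
    player_id: str
    strikes: int = 0  # weighted total of past confirmed infractions


def apply_penalty(record: PlayerRecord, severity: Severity) -> str:
    """Record an infraction and return the penalty rung it lands on.

    Repeat offences climb the ladder one strike at a time, while a heavier
    infraction adds more strikes and can skip the lower rungs entirely.
    """
    record.strikes += severity.value
    # Clamp to the top rung so further offences stay at the maximum penalty.
    step = min(record.strikes - 1, len(LADDER) - 1)
    return LADDER[step]


if __name__ == "__main__":
    alice = PlayerRecord("alice")
    print(apply_penalty(alice, Severity.MINOR))  # warning
    print(apply_penalty(alice, Severity.MINOR))  # temp_ban_24h

    bob = PlayerRecord("bob")
    print(apply_penalty(bob, Severity.SEVERE))   # permanent_ban
```

Weighting strikes by severity is one simple way to let a single serious offence warrant a heavy penalty while still giving first-time minor offenders a warning. A real system would likely also let strikes expire over time and route every escalation through the appeals process discussed below.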

Nurturing Healthy Discussion and Engagement

Empowering Community Leaders and Positive Reinforcement

Beyond punishment, moderation should also focus on positive reinforcement. Recognizing and rewarding players who contribute constructively can set a positive tone. Empowering trusted members of the community, such as volunteer moderators or community leaders, can extend the reach of moderation and foster a sense of shared responsibility. These individuals can often de-escalate situations and guide discussions more effectively because of their standing within the community.

Creating Designated Spaces for Diverse Discussions

To avoid stifling legitimate discussion, communities can create dedicated channels or forums for specific types of discourse. For instance, a ‘feedback’ channel might be moderated more strictly to keep criticism constructive, while a ‘general chat’ channel might allow more casual, informal interaction. Likewise, ‘off-topic’ sections give a home to conversations that, while not toxic, might otherwise derail primary discussions.
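One way to picture channel-specific moderation is as a small per-channel policy table: the same message can be treated differently depending on where it is posted. The sketch below is purely illustrative. The `ChannelPolicy` fields, the threshold values, and the assumption that each message arrives with a `flag_score` between 0 and 1 (for example from an automated flagging tool) are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChannelPolicy:
    """Hypothetical per-channel moderation settings (illustrative values)."""
    name: str
    # Flag score at or above which a message is held for human review.
    review_threshold: float
    # Whether off-topic messages are nudged toward the off-topic section.
    redirect_off_topic: bool


# Stricter review in 'feedback'; more relaxed in casual channels.
POLICIES = {
    "feedback": ChannelPolicy("feedback", review_threshold=0.4, redirect_off_topic=True),
    "general-chat": ChannelPolicy("general-chat", review_threshold=0.7, redirect_off_topic=False),
    "off-topic": ChannelPolicy("off-topic", review_threshold=0.7, redirect_off_topic=False),
}


def route_message(channel: str, flag_score: float, on_topic: bool) -> str:
    """Decide what happens to a message under its channel's policy."""
    policy = POLICIES[channel]
    if flag_score >= policy.review_threshold:
        return "hold_for_review"
    if policy.redirect_off_topic and not on_topic:
        return "suggest_off_topic"
    return "publish"


if __name__ == "__main__":
    # The same borderline message is reviewed in 'feedback' but published in chat.
    print(route_message("feedback", 0.5, on_topic=True))      # hold_for_review
    print(route_message("general-chat", 0.5, on_topic=True))  # publish
    # A harmless but off-topic post in 'feedback' is redirected, not removed.
    print(route_message("feedback", 0.1, on_topic=False))     # suggest_off_topic
```

Redirecting rather than deleting off-topic posts reflects the goal of diverting conversations instead of shutting them down; the thresholds themselves would need tuning against each community’s own moderation data.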

Transparency, Appeals, and Education

Moderators should strive for transparency in their decisions, especially concerning bans or significant penalties. An appeals process allows players to challenge decisions, fostering trust and demonstrating that moderation is fair, not arbitrary. Furthermore, educating the community about the ‘why’ behind certain rules and the impact of toxicity can cultivate a more empathetic and self-regulating environment over time. Explaining the nuances of digital etiquette and the importance of diverse perspectives can be a powerful tool.

The Iterative Process of Community Management

No moderation strategy is perfect or static. Gaming communities evolve, as do the tactics of toxic individuals. Therefore, effective moderation is an ongoing, iterative process. Regularly collecting feedback from the community, analyzing moderation data, and being willing to adapt rules and tools are crucial for maintaining a healthy and vibrant discussion space. This continuous refinement ensures that moderators can keep pace with new challenges while consistently upholding the values of open, respectful engagement.

Conclusion

Effectively combating toxicity without stifling community discussion is a complex but achievable goal for gaming moderators. It requires a multifaceted approach that combines clear rules, robust enforcement, proactive engagement, positive reinforcement, and a commitment to transparency. By balancing strictness with empathy, and by continuously adapting to the evolving nature of online interactions, moderators can cultivate gaming communities that are not only safe and inclusive but also dynamic, engaging, and rich with diverse perspectives.