How do mod teams filter toxic comments to find useful community bug reports?

Online communities are vibrant hubs for discussion, feedback, and engagement. For developers and project teams, they are an invaluable resource for understanding user experience and identifying issues. However, these spaces can also become breeding grounds for toxicity, which makes extracting useful, actionable information, especially bug reports, a significant challenge. Mod teams sit on the front line of this problem, acting as the filter between raw community sentiment and constructive development input.

The Dual Challenge: Toxicity and Value

The sheer volume of comments across forums, social media, and dedicated platforms can be overwhelming. Within this deluge, passionate users often express frustration or excitement, which can sometimes manifest as aggressive or unhelpful commentary. Distinguishing genuine, well-observed bug reports from rants, personal attacks, or unsubstantiated complaints requires a sharp eye and robust strategies.

Many legitimate bug reports are buried within emotional language. A user describing a “game-breaking glitch that ruins everything” could be pointing to a critical error, but that presentation makes its value harder to recognize at a glance than a calm, structured report would.

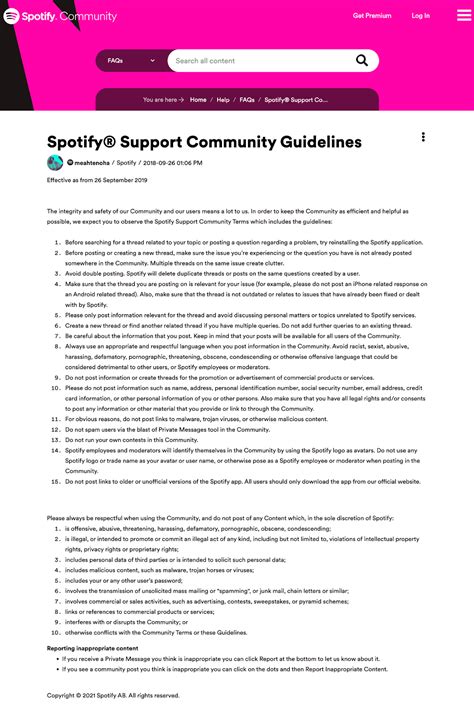

Setting Clear Guidelines and Expectations

A strong foundation for effective moderation begins with transparent and enforced community guidelines. A well-defined code of conduct helps manage expectations, outlines acceptable behavior, and provides a clear framework for users on how to report issues constructively. This includes specifying where and how bug reports should be submitted, often through dedicated channels or forms.

Educating the community on what constitutes a good bug report—detailing steps to reproduce, expected vs. actual behavior, and system information—can significantly reduce noise and improve the quality of submissions. Clear reporting mechanisms empower users to contribute effectively, making the moderators’ job easier.

Leveraging Technology: Moderation Tools and AI

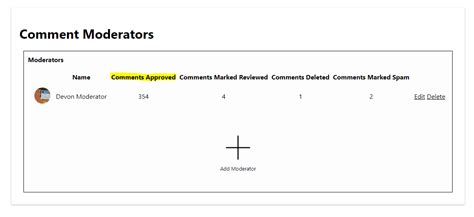

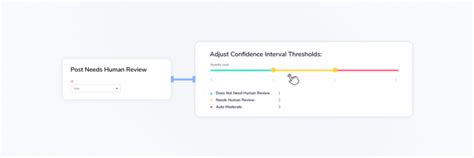

Given the scale of modern online communities, human moderation alone is often insufficient. Mod teams increasingly rely on technology to assist in filtering. Automated tools employ keyword filtering to flag potentially toxic language, hate speech, or spam. Sentiment analysis can help identify comments with strong negative connotations, directing moderators to areas requiring human review.
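To make the keyword-filtering idea concrete, here is a minimal Python sketch. It assumes two hypothetical word lists, `TOXIC_TERMS` and `BUG_SIGNALS`, and a hypothetical set of queues (`human_review`, `bug_queue`, `moderation_queue`, `no_action`). What it illustrates is that a comment flagged for toxic language is not simply discarded: if it also contains bug-related terms, it goes to a human who can extract the report. It covers keyword matching only, not sentiment analysis.

```python
import re
from dataclasses import dataclass, field

# Hypothetical word lists for illustration; a real community would curate its own.
TOXIC_TERMS = {"idiot", "trash", "garbage", "stupid", "useless"}
BUG_SIGNALS = {"bug", "glitch", "crash", "crashes", "crashed",
               "freeze", "freezes", "error", "broken", "stuck"}


@dataclass
class Triage:
    toxic_hits: list[str] = field(default_factory=list)
    bug_hits: list[str] = field(default_factory=list)

    @property
    def route(self) -> str:
        """Decide which (hypothetical) queue a comment should go to."""
        if self.bug_hits:
            # Possible bug report: a human reads it even if the tone is hostile.
            return "human_review" if self.toxic_hits else "bug_queue"
        return "moderation_queue" if self.toxic_hits else "no_action"


def triage(comment: str) -> Triage:
    """Flag toxic terms and bug-related terms using exact word matches."""
    words = re.findall(r"[a-z']+", comment.lower())
    return Triage(
        toxic_hits=[w for w in words if w in TOXIC_TERMS],
        bug_hits=[w for w in words if w in BUG_SIGNALS],
    )


print(triage("This trash update crashes every time I open the map").route)  # human_review
print(triage("Game freezes on the loading screen after the patch").route)   # bug_queue
print(triage("You are an idiot").route)                                      # moderation_queue
```

Exact-word matching is deliberately crude; a production filter would also need to cope with misspellings, deliberate obfuscation, and word variants.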

Advanced AI and machine learning algorithms are also being deployed to learn from past moderation decisions, automatically categorizing or even pre-filtering comments. While not perfect, these tools can significantly reduce the manual workload, allowing human moderators to focus on nuanced cases and complex reports that require human judgment.
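As a rough illustration of learning from past moderation decisions, the sketch below trains a multinomial naive Bayes classifier on comments that moderators have already labeled. Naive Bayes is a simple stand-in chosen for brevity, not the technique any particular moderation tool uses, and the training comments and the `bug`/`toxic`/`other` labels are invented for the example.

```python
import math
import re
from collections import Counter, defaultdict


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayes:
    """Multinomial naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, texts: list[str], labels: list[str]) -> "NaiveBayes":
        self.label_counts = Counter(labels)       # how many past comments per label
        self.word_counts = defaultdict(Counter)   # word frequencies per label
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        return self

    def predict(self, text: str) -> str:
        total_docs = sum(self.label_counts.values())
        best_label, best_score = None, -math.inf
        for label, doc_count in self.label_counts.items():
            counts = self.word_counts[label]
            denom = sum(counts.values()) + len(self.vocab)
            score = math.log(doc_count / total_docs)  # log prior
            for word in tokenize(text):
                if word in self.vocab:  # ignore words never seen in training
                    score += math.log((counts[word] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Hypothetical past moderation decisions.
past_texts = [
    "game crashes when I open the inventory",
    "audio cuts out after the latest patch",
    "texture flickers on the bridge level",
    "you are all idiots",
    "worst devs ever, total garbage",
    "love the new art style",
    "when is the next event",
]
past_labels = ["bug", "bug", "bug", "toxic", "toxic", "other", "other"]

model = NaiveBayes().fit(past_texts, past_labels)
print(model.predict("crashes after the patch"))  # bug
print(model.predict("you are garbage"))          # toxic
```

A classifier like this is best used to pre-sort the queue rather than to make final decisions, leaving nuanced cases to human moderators.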

The Human Element: Experienced Moderators

Despite technological advancements, the human element remains irreplaceable. Experienced moderators possess the contextual understanding, empathy, and critical thinking skills to interpret the intent behind a comment, even if it’s poorly worded or emotionally charged. They can distinguish genuine frustration from malicious trolling, and extract valuable information from less-than-ideal reports.

Effective human moderators are trained to look past the tone and focus on the core message. They understand the project’s intricacies, common issues, and how different user groups articulate problems. Their ability to cross-reference multiple vague reports to identify an underlying, critical bug is a testament to their expertise.

Strategies for Effective Bug Report Identification

Beyond general moderation, specific strategies improve bug report identification. Dedicated bug reporting sections or tools, such as Jira integrations or forum sub-sections with required templates, push users to structure their feedback. Enforcing these templates ensures that essential information, such as reproduction steps, platform, and frequency, is consistently provided.
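A template only helps if incomplete submissions are caught. The sketch below checks a form submission, represented as a plain dictionary, against a hypothetical set of required fields drawn from the information this article lists (reproduction steps, expected vs. actual behavior, platform, frequency). The field keys and the reply wording are assumptions for illustration.

```python
# Hypothetical template: form key -> human-readable field name.
REQUIRED_FIELDS = {
    "steps_to_reproduce": "Steps to reproduce",
    "expected_behavior": "Expected behavior",
    "actual_behavior": "Actual behavior",
    "platform": "Platform",
    "frequency": "How often it happens",
}


def missing_fields(report: dict[str, str]) -> list[str]:
    """Return the names of required fields that are absent or blank."""
    return [
        label for key, label in REQUIRED_FIELDS.items()
        if not (report.get(key) or "").strip()
    ]


def review(report: dict[str, str]) -> str:
    """Accept a complete report, or ask for exactly what is missing."""
    missing = missing_fields(report)
    if not missing:
        return "accepted"
    return "Please add: " + ", ".join(missing)


report = {
    "steps_to_reproduce": "1. Open the map 2. Zoom all the way out",
    "actual_behavior": "Game freezes",
    "platform": "PC, Windows 11",
    "frequency": "Every time",
}
print(review(report))  # Please add: Expected behavior
```

Returning the missing field names, rather than a bare rejection, lets a moderator or bot reply with a specific request for clarification.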

Moderators also employ techniques like “signal boosting” well-written reports, actively requesting clarification from users on vague issues, and consolidating duplicate reports to identify common patterns and prioritize issues. Establishing a clear escalation path for verified bugs directly to development teams ensures quick action.
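Consolidating duplicates can be partly automated. The sketch below groups reports whose word sets overlap, using Jaccard similarity (shared words divided by total distinct words) in a greedy single pass. Jaccard similarity and the 0.4 threshold are assumptions chosen for simplicity; the article does not specify a method, and more robust approaches, such as comparing text embeddings, exist.

```python
import re


def tokens(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def group_duplicates(reports: list[str], threshold: float = 0.4) -> list[list[str]]:
    """Greedy single-pass grouping: each report joins the first group whose
    seed (first) report is similar enough, otherwise it starts a new group."""
    groups: list[tuple[set[str], list[str]]] = []
    for report in reports:
        t = tokens(report)
        for seed, members in groups:
            if jaccard(seed, t) >= threshold:
                members.append(report)
                break
        else:
            groups.append((t, [report]))
    # Largest groups first: many independent reports suggest a widespread issue.
    return sorted((members for _, members in groups), key=len, reverse=True)


reports = [
    "Game crashes when opening the map",
    "crashes when opening the map on PS5",
    "Sound cuts out in menus",
    "game crashes opening map",
]
for group in group_duplicates(reports):
    print(len(group), group[0])
# 3 Game crashes when opening the map
# 1 Sound cuts out in menus
```

Sorting groups by size gives moderators a quick view of which issues are reported most often, which can feed into prioritization and the escalation path.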

Feedback Loops and Community Engagement

Maintaining a positive feedback loop with the community is crucial. Acknowledging valid bug reports, even with a simple “received and forwarded,” encourages users to continue contributing constructively. When users see their efforts are recognized and lead to actual improvements, they are more likely to put effort into well-structured reports.

Conversely, explaining why certain reports might not be actionable (e.g., lack of detail, not a bug) can educate the community over time, further refining the quality of future submissions. This continuous engagement builds trust and fosters a more cooperative environment.

Ultimately, filtering toxic comments to find useful bug reports is a multi-faceted endeavor. It’s a delicate balance of strong community guidelines, sophisticated technological tools, and the irreplaceable judgment of experienced human moderators. By combining these elements, mod teams transform the often-chaotic landscape of online communities into a valuable wellspring of actionable feedback, directly contributing to the improvement and success of any project.