How to Integrate PC Gaming Performance Data into Reviews Effectively

The Indispensable Role of Performance Data in Modern PC Game Reviews

In the evolving landscape of PC gaming, a review that merely describes gameplay mechanics and story beats is no longer sufficient. Modern gamers run widely varying hardware configurations and want to know how a game will perform on theirs, so they expect quantitative insights. Integrating performance data effectively grounds a review's subjective critique in measurable evidence, guiding purchasing decisions and setting realistic expectations. The challenge lies not just in collecting data, but in presenting it in a way that is both comprehensive and easily digestible for a broad audience.

Selecting the Right Metrics for Comprehensive Analysis

The first step in effective data integration is identifying which metrics truly matter. While frames per second (FPS) remains the primary indicator, a holistic view requires more. Average FPS gives a general idea, but 1% low and 0.1% low FPS figures are critical for gauging frame time consistency and identifying stuttering, which can significantly affect how smooth the game feels. Beyond raw FPS, reviewers should consider including frame time graphs where appropriate, especially for titles known for consistency issues. Additionally, specifying the test resolution, graphics preset (e.g., Ultra, High, Medium), and graphics API (e.g., DirectX 12, Vulkan) is essential for reproducibility and comparison.
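As a rough illustration of how these figures relate to one another, the sketch below derives average FPS and the 1% and 0.1% lows from a list of per-frame times in milliseconds. Definitions of the "lows" differ between capture tools (some report a percentile, others average the slowest frames); this example assumes the "average of the slowest 1% of frames" definition, and the function name `fps_metrics` is hypothetical.

```python
from statistics import mean


def fps_metrics(frame_times_ms):
    """Return average, 1% low and 0.1% low FPS from frame times in milliseconds."""
    if not frame_times_ms:
        raise ValueError("need at least one frame time")

    # Average FPS is frames rendered divided by elapsed time,
    # not the mean of per-frame FPS values.
    total_seconds = sum(frame_times_ms) / 1000.0
    avg_fps = len(frame_times_ms) / total_seconds

    slowest_first = sorted(frame_times_ms, reverse=True)

    def low_fps(divisor):
        # Assumed definition: average the slowest 1/divisor of frames
        # (always at least one frame) and convert that time to FPS.
        count = max(1, len(slowest_first) // divisor)
        return 1000.0 / mean(slowest_first[:count])

    return {
        "avg_fps": avg_fps,
        "low_1pct_fps": low_fps(100),
        "low_0_1pct_fps": low_fps(1000),
    }


# 99 frames at 10 ms plus a single 50 ms hitch.
metrics = fps_metrics([10.0] * 99 + [50.0])
print(metrics)
```

A single long frame barely moves the average here but pulls the 1% low down to 20 FPS, which is why the lows are reported alongside the average.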

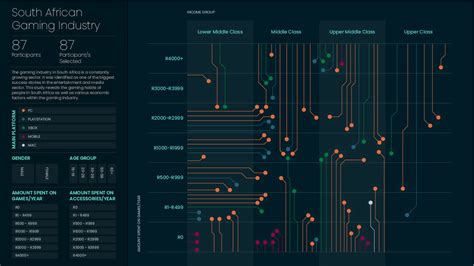

Other valuable data points include CPU utilization, GPU utilization, VRAM usage, and system latency if tools permit reliable measurement. This deep dive offers a more complete picture of how a game taxes different components of a system, helping readers identify potential bottlenecks in their own setups.

Establishing a Rigorous and Transparent Methodology

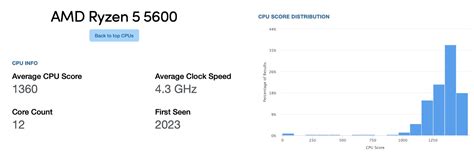

Consistency is paramount when collecting performance data. A standardized testing methodology ensures that results are comparable across different games and reviews. This involves using a consistent test bench with specific hardware (CPU, GPU, RAM), driver versions, and operating system configurations. Reviewers should explicitly state their test system specifications, including all relevant components and software versions, allowing readers to understand the context of the benchmarks. Running multiple test passes for each benchmark sequence and reporting average or median results helps mitigate anomalies.
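One possible way to aggregate repeated passes is sketched below. It reports the median next to the mean and adds the coefficient of variation as a simple indicator of run-to-run spread. The function name `summarize_passes` and the choice of spread statistic are assumptions for illustration, not a prescribed method.

```python
from statistics import mean, median, stdev


def summarize_passes(values):
    """Summarize one metric (e.g. average FPS) measured over repeated passes."""
    if len(values) < 2:
        raise ValueError("run at least two passes to estimate run-to-run variation")
    avg = mean(values)
    return {
        "passes": len(values),
        "median": median(values),
        "mean": avg,
        # Coefficient of variation: sample standard deviation as a percentage of the mean.
        "cv_percent": 100.0 * stdev(values) / avg,
    }


# Three passes of the same benchmark sequence (placeholder values, not measurements).
print(summarize_passes([70.0, 72.0, 71.0]))
```

A high coefficient of variation suggests the sequence is not repeatable enough, or that one pass was disturbed by something outside the game, and is worth investigating before publishing.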

Furthermore, defining the benchmark sequence is crucial. This could involve using in-game benchmark tools (if reliable) or carefully designed, repeatable manual run-throughs of demanding sections of the game. Automating the capture process where possible with tools like CapFrameX or OCAT can improve accuracy and reduce human error.
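Once a capture has been exported to CSV, loading it can be scripted so that every run is processed the same way. The sketch below assumes the export has a per-frame column named `MsBetweenPresents`, as in PresentMon-style logs. Column names vary between tools and versions, so treat that name as an assumption and check your own export. The function name `read_frame_times` is hypothetical.

```python
import csv
import io


def read_frame_times(csv_file, column="MsBetweenPresents"):
    """Read per-frame times (ms) from an open CSV file object.

    The default column name is an assumption about the capture format.
    """
    reader = csv.DictReader(csv_file)
    if reader.fieldnames is None or column not in reader.fieldnames:
        raise KeyError(f"column {column!r} not found; check the capture tool's export format")
    # Skip rows where the column is empty rather than failing on them.
    return [float(row[column]) for row in reader if row[column]]


# In-memory stand-in for a capture file (placeholder values, not measurements).
sample = io.StringIO("Application,MsBetweenPresents\ngame.exe,16.7\ngame.exe,33.4\n")
print(read_frame_times(sample))  # [16.7, 33.4]
```

For a real capture, pass a file opened with `open(path, newline="")` in place of the `io.StringIO` object.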

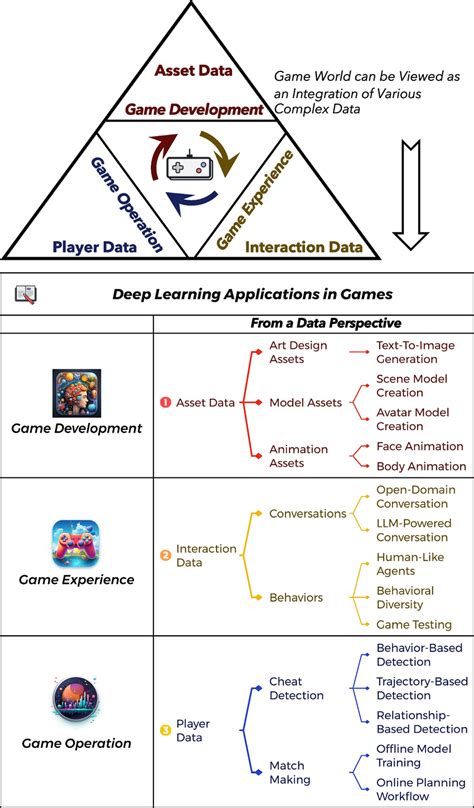

Transforming Raw Data into Actionable Insights

Presenting raw numbers without context or visual aids can be overwhelming. Effective data integration relies heavily on clear, concise, and visually appealing presentation. Bar graphs are excellent for comparing average FPS across different settings or GPUs. Line graphs can illustrate frame time over a period, highlighting stutters. Comparison tables, summarizing performance across various resolutions and graphical presets, are also highly effective.
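A comparison table can be generated straight from the collected results so that it stays in sync with the data. The following is a minimal sketch that renders a fixed-width plain-text table. The helper name `format_table` is hypothetical and the rows are placeholder values, not measurements.

```python
def format_table(headers, rows):
    """Render rows as a fixed-width plain-text table."""
    cells = [[str(c) for c in headers]] + [[str(c) for c in row] for row in rows]
    # Each column is as wide as its longest cell.
    widths = [max(len(line[i]) for line in cells) for i in range(len(headers))]

    def fmt(line):
        return " | ".join(cell.ljust(w) for cell, w in zip(line, widths)).rstrip()

    separator = "-+-".join("-" * w for w in widths)
    return "\n".join([fmt(cells[0]), separator] + [fmt(line) for line in cells[1:]])


# Placeholder values for illustration only.
print(format_table(
    ["Preset", "Resolution", "Avg FPS", "1% low"],
    [["Ultra", "1440p", 70, 35], ["High", "1440p", 88, 61]],
))
```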

The goal is to distill complex data into easily understandable insights. Instead of just listing numbers, explain what they mean for the player. For instance, clearly state whether a game is “smooth at 60 FPS on Ultra settings at 1440p with an RTX 4070” or if “significant stuttering occurred when traversing open-world areas, indicated by 0.1% low FPS dipping below 20.”

Contextualizing Performance with Gameplay Experience

While numbers are vital, they shouldn’t exist in a vacuum. The most effective reviews weave performance data into the narrative of the gameplay experience. How does a dip in frame rate affect a fast-paced shooter? Does a consistent 120 FPS truly enhance immersion in a strategy game? Connect the technical data to tangible in-game scenarios. This humanizes the numbers and makes the review more relatable and useful to the reader.

For example, a review might note that “despite an average of 70 FPS, the frequent stutters during intense combat sequences, reflected in the 1% low FPS of 35, noticeably hampered responsiveness and enjoyment.” This blend of quantitative data and qualitative experience is the hallmark of a truly insightful PC game review.
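The gap between average and 1% low FPS in that example can be turned into a simple check that prompts the reviewer to look more closely at a scene. The sketch below flags a result when the 1% low falls below a chosen fraction of the average. The 0.6 threshold and the function name `consistency_check` are assumptions for illustration, not an established standard, and the check does not replace the reviewer's own impression of how the game felt.

```python
def consistency_check(avg_fps, low_1pct_fps, min_ratio=0.6):
    """Return (ratio, flagged), where ratio is the 1% low divided by average FPS."""
    if avg_fps <= 0:
        raise ValueError("average FPS must be positive")
    ratio = low_1pct_fps / avg_fps
    # Flag results whose 1% low is far below the average (threshold is an assumption).
    return ratio, ratio < min_ratio


# Figures from the example above: 70 FPS average, 35 FPS 1% low.
ratio, flagged = consistency_check(70, 35)
print(ratio, flagged)  # 0.5 True
```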

Avoiding Common Pitfalls and Ensuring Objectivity

One common mistake is overwhelming the reader with too much granular data without proper summarization or analysis. Another is failing to compare results against a baseline or competing hardware, which diminishes the data’s utility. Ensure that any conclusions drawn are directly supported by the presented data and avoid making subjective performance claims without objective evidence.
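Comparing against a baseline is mostly a matter of expressing each result as a percentage difference from a reference configuration. A minimal sketch follows, with a hypothetical function name and placeholder values rather than measurements:

```python
def relative_to_baseline(baseline_fps, results):
    """Return each result's percentage difference from the baseline FPS."""
    if baseline_fps <= 0:
        raise ValueError("baseline FPS must be positive")
    return {
        name: 100.0 * (fps - baseline_fps) / baseline_fps
        for name, fps in results.items()
    }


# Placeholder configurations and values for illustration only.
print(relative_to_baseline(60.0, {"Config A": 75.0, "Config B": 54.0}))
# {'Config A': 25.0, 'Config B': -10.0}
```

Stating which configuration serves as the baseline, and why, keeps the percentages meaningful to readers.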

Maintaining objectivity also extends to transparent disclosure of any pre-release drivers or game patches used, as these can significantly alter performance. Regular updates to reviews, especially for games that receive substantial post-launch optimization patches, further enhance their long-term value.

Conclusion: Elevating Reviews Through Data-Driven Insights

Integrating PC gaming performance data well can turn a good review into an excellent one. By carefully selecting relevant metrics, establishing a transparent and rigorous methodology, presenting data clearly with visual aids, and, most importantly, contextualizing these numbers within the actual gameplay experience, reviewers can provide invaluable guidance to the PC gaming community. This data-driven approach not only satisfies the demands of an informed audience but also raises the overall standard of game journalism, making reviews more reliable and actionable.