What are best practices for moderating game mod submissions and user comments?

Player-created modifications (mods) and user comments drive community engagement and extend a game's lifespan. Without proper oversight, however, they can also introduce security risks, offensive content, and toxicity. Robust moderation practices are essential for keeping the environment safe, inclusive, and enjoyable for all players.

Establishing Clear Guidelines and Policies

The foundation of effective moderation lies in comprehensive and easily accessible guidelines. These policies should clearly define what is acceptable and unacceptable for both mod submissions and user comments. For mods, this includes technical requirements, content restrictions (e.g., no hate speech, copyrighted material, or exploits), and quality standards. For comments, it means outlining expected behavior and prohibiting harassment, spam, and personal attacks. Transparency is key: users should understand the rules before they engage.

- Clearly define acceptable content and behavior.

- Prohibit hate speech, harassment, and illegal content.

- Outline technical and quality standards for mods.

- Ensure policies are easily accessible and understandable.

Implementing a Robust Submission and Review Process

A multi-stage review process helps catch problematic content before it goes live. For mod submissions, this typically involves an initial automated scan for viruses, known exploits, or blacklisted keywords, followed by a manual review by human moderators. Human reviewers can assess subjective elements like artistic quality, potential for abuse, and adherence to the spirit of the game. For user comments, automated filters can flag suspicious content for human review, while a reporting system empowers the community to identify issues.

- Utilize automated screening for technical issues and keywords.

- Conduct thorough manual reviews by trained moderators.

- Implement a staged release process for new mods.

- Provide clear feedback to mod creators on rejections.
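As a rough illustration of the first, automated stage, the sketch below runs a few cheap checks on a mod submission and either rejects it with reasons (which can be passed back to the creator as feedback) or queues it for manual review. Everything here is an assumption made for the example: the `ModSubmission` and `ScanResult` types, the keyword and extension lists, and the hash set standing in for a real malware-scanning service.

```python
import hashlib
from dataclasses import dataclass, field

# Hypothetical values for illustration; a real deployment would rely on a
# malware-scanning service and the platform's published content policy.
KNOWN_BAD_SHA256 = {hashlib.sha256(b"malicious payload").hexdigest()}
BLOCKED_KEYWORDS = {"aimbot", "wallhack"}
ALLOWED_EXTENSIONS = {".zip", ".pak"}


@dataclass
class ModSubmission:
    name: str
    description: str
    filename: str
    payload: bytes


@dataclass
class ScanResult:
    status: str  # "rejected" or "pending_manual_review"
    reasons: list[str] = field(default_factory=list)


def automated_scan(mod: ModSubmission) -> ScanResult:
    """Stage 1: cheap automated checks that run before any human sees the mod."""
    reasons = []
    if not any(mod.filename.lower().endswith(ext) for ext in ALLOWED_EXTENSIONS):
        reasons.append(f"file type not allowed: {mod.filename}")
    if hashlib.sha256(mod.payload).hexdigest() in KNOWN_BAD_SHA256:
        reasons.append("payload matches a known malicious file")
    text = f"{mod.name} {mod.description}".lower()
    for keyword in sorted(BLOCKED_KEYWORDS):
        if keyword in text:
            reasons.append(f"blocked keyword in listing: {keyword}")
    if reasons:
        # The reasons double as feedback for the mod creator.
        return ScanResult("rejected", reasons)
    # Passing the scan does not publish the mod; it only queues it for stage 2.
    return ScanResult("pending_manual_review")
```

Note that a clean scan never publishes anything on its own. Subjective questions such as quality or potential for abuse still go to a human moderator.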

Fostering a Healthy Community Environment

Moderation isn’t just about deleting bad content; it’s about nurturing a positive community. Community managers and moderators should actively engage with users, providing positive reinforcement for good behavior and helpful contributions. This includes highlighting exemplary mods and constructive discussions. When issues arise, a consistent and fair approach to enforcement is crucial. Publicly addressing common moderation issues (without shaming individuals) can also educate the community and reinforce standards.

Encouraging self-moderation through community leaders or trusted users can also extend the reach of moderation efforts, provided they are properly vetted and supported. Building a culture of respect and constructive interaction minimizes the need for heavy-handed intervention.

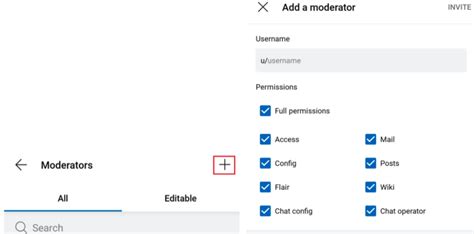

Utilizing Advanced Moderation Tools and Automation

Modern moderation platforms offer a suite of tools that reduce the volume of content human moderators must review by hand. AI-powered content filters can detect and flag harmful language, images, or even behavior patterns in real time. Keyword blacklists and whitelists, spam detection algorithms, and IP blocking are also widely used. For mods, version control and dependency tracking can help prevent broken or incompatible updates from reaching players. These tools free up human moderators to focus on more complex or nuanced cases that require human judgment.

- Deploy AI and machine learning for real-time content filtering.

- Use keyword blacklists, spam detection, and IP blocking.

- Implement reporting systems for community-flagged content.

- Integrate version control for mod updates.
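To make the keyword-list and reporting ideas concrete, here is a minimal sketch of a comment filter and a community report queue. The word lists, thresholds, and the names `flag_comment` and `ReportQueue` are all hypothetical and chosen only for illustration. A production system would likely pair rules like these with a trained classifier rather than depend on them alone.

```python
import re
from collections import defaultdict

# Hypothetical lists and thresholds, chosen only for illustration.
BLOCKLIST = {"idiot", "trash"}
ALLOWLIST = {"trash can"}   # phrases that contain a blocked word but are fine
MAX_LINKS = 2               # more links than this looks like spam
REPORT_THRESHOLD = 3        # distinct reporters needed to escalate

URL_PATTERN = re.compile(r"https?://\S+")
WORD_PATTERN = re.compile(r"[a-z']+")


def flag_comment(text: str) -> list[str]:
    """Return reasons to send a comment to human review (empty list = no flag)."""
    lowered = text.lower()
    reasons = []
    # Remove allowlisted phrases first so they cannot trigger the blocklist.
    for phrase in ALLOWLIST:
        lowered = lowered.replace(phrase, " ")
    # Compare whole words, so a longer word that merely contains a blocked
    # word is not flagged by accident.
    hits = sorted(set(WORD_PATTERN.findall(lowered)) & BLOCKLIST)
    if hits:
        reasons.append("blocked words: " + ", ".join(hits))
    if len(URL_PATTERN.findall(text)) > MAX_LINKS:
        reasons.append("too many links")
    return reasons


class ReportQueue:
    """Escalate a comment once enough distinct users have reported it."""

    def __init__(self) -> None:
        self._reporters: dict[int, set[str]] = defaultdict(set)

    def report(self, comment_id: int, reporter: str) -> bool:
        # A set ignores repeat reports from the same user.
        self._reporters[comment_id].add(reporter)
        return len(self._reporters[comment_id]) >= REPORT_THRESHOLD
```

Both pieces only flag content for review. Deciding what happens to a flagged comment stays with a human moderator, in line with the division of labor described above.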

Continuous Review, Adaptation, and Training

The online landscape, and gaming communities in particular, is constantly evolving, and moderation practices must adapt accordingly. Regularly review your guidelines and processes, soliciting feedback from both the community and your moderation team. Train moderators continuously on new threats, policy updates, and best practices for de-escalation and handling sensitive situations. Understanding cultural nuances is also vital in a global gaming community.

Staying informed about industry trends and emerging content types (e.g., AI-generated content) helps keep your moderation strategy proactive rather than reactive.

Effective moderation of game mod submissions and user comments is an ongoing commitment. By combining clear policies, robust processes, smart tools, active community engagement, and continuous adaptation, game developers and community managers can cultivate vibrant, safe, and positive environments where creativity flourishes and players feel valued and respected.