What are best practices for moderating user-created game mods to ensure quality & safety?

User-created game modifications, or “mods,” are a vibrant cornerstone of many gaming communities, extending game longevity and offering boundless creativity. However, this open ecosystem also presents unique challenges for platform holders and game developers: how do you ensure these mods maintain a high standard of quality and, critically, don’t compromise user safety? Effective moderation isn’t just about policing; it’s about fostering a healthy environment where creativity thrives responsibly.

The Dual Imperative: Quality and Safety

Moderating game mods involves a delicate balance. On one hand, players expect mods to be functional and stable and to enhance their gaming experience – this is the quality aspect. Poorly made mods can crash games, introduce bugs, or simply detract from enjoyment. On the other hand, the safety aspect is paramount: protecting users from malicious software (viruses, trojans, and other malware), inappropriate content (explicit, hateful, or illegal material), and exploitation such as scams or unauthorized collection of personal data.

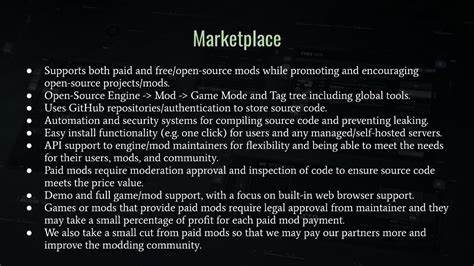

Establishing Clear Guidelines and Policies

The foundation of any successful moderation strategy is a comprehensive set of clear, accessible, and enforceable guidelines for mod creators. These should cover both technical requirements (e.g., file formats, performance standards, compatibility) and content restrictions (e.g., no hate speech, unlicensed copyrighted material, sexually explicit content, or real-world political extremism). Transparency is key; modders need to understand exactly what is expected of them and the consequences of non-compliance.

Technical Specifications and Performance

Guidelines should detail expected technical standards to prevent game instability. This includes recommendations for optimization, acceptable asset sizes, and compatibility with the base game’s various versions. Providing tools or templates can further assist modders in meeting these standards, reducing the review burden later.
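As a minimal sketch of how such standards can be checked automatically at upload time, the Python below validates a hypothetical mod manifest. The limits (`MAX_TOTAL_BYTES`, `ALLOWED_EXTENSIONS`, `SUPPORTED_GAME_VERSIONS`) and the `ModManifest` shape are illustrative assumptions; each game would define its own.

```python
from __future__ import annotations

from dataclasses import dataclass
from pathlib import PurePosixPath

# Hypothetical limits: a real game would publish its own values in its modding guidelines.
MAX_TOTAL_BYTES = 500 * 1024 * 1024  # 500 MiB per mod
ALLOWED_EXTENSIONS = {".json", ".lua", ".png", ".ogg", ".dds"}
SUPPORTED_GAME_VERSIONS = {"1.4", "1.5"}


@dataclass
class ModManifest:
    name: str
    game_version: str       # base-game version the mod targets
    files: dict[str, int]   # relative path inside the archive -> size in bytes


def validate_manifest(m: ModManifest) -> list[str]:
    """Return human-readable problems; an empty list means the technical checks passed."""
    problems: list[str] = []
    if m.game_version not in SUPPORTED_GAME_VERSIONS:
        problems.append(f"unsupported game version {m.game_version!r}")
    total = 0
    for path, size in sorted(m.files.items()):
        p = PurePosixPath(path)
        # Reject absolute paths and ".." segments that could write outside the mod folder.
        if p.is_absolute() or ".." in p.parts:
            problems.append(f"unsafe path {path!r}")
        if p.suffix.lower() not in ALLOWED_EXTENSIONS:
            problems.append(f"disallowed file type {path!r}")
        total += size
    if total > MAX_TOTAL_BYTES:
        problems.append(f"total size {total} bytes exceeds limit of {MAX_TOTAL_BYTES}")
    return problems
```

Returning every problem at once, rather than stopping at the first, lets an upload page show the modder everything to fix in a single pass, which is what reduces the later review burden.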

Content Restrictions and Ethical Standards

Beyond technicalities, robust content policies are crucial. These must explicitly forbid content that is illegal, harmful, or offensive. This includes malware, cheats/hacks that disrupt fair play, discriminatory content, and overly violent or sexual material that falls outside the game’s established rating or community norms. Regular review and updates to these policies are essential as community standards evolve.

Implementing a Multi-Tiered Review Process

Given the potential volume of submissions, a multi-tiered approach combining automated and human moderation is often the most effective.

Automated Scanning and Pre-screening

Initial checks can leverage automated tools for file integrity, virus and malware scanning, and basic content filtering. AI and machine learning can help identify suspicious file types and known malicious code patterns, and can flag content that deviates significantly from acceptable norms based on keywords or image analysis. This filters out low-effort or overtly harmful submissions before they reach human eyes.
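The pre-screening tier might look roughly like the following sketch. It assumes mods are uploaded as ZIP archives and uses three deliberately simple checks: an exact SHA-256 match against a known-bad hash list, an archive integrity check, and a blocklist of executable file types plus a keyword check on the description. `KNOWN_BAD_SHA256`, `BLOCKED_EXTENSIONS`, and `FLAGGED_TERMS` are hypothetical placeholders. Hash matching only catches previously identified samples, so it complements a real antivirus engine rather than replacing it, and nothing here publishes a mod: the best outcome is routing to standard human review.

```python
from __future__ import annotations

import hashlib
import io
import zipfile
from pathlib import PurePosixPath

# Hypothetical inputs: in practice these would come from a threat-intelligence feed
# and the platform's own content policy.
KNOWN_BAD_SHA256: set[str] = set()
BLOCKED_EXTENSIONS = {".exe", ".dll", ".bat", ".ps1", ".scr"}
FLAGGED_TERMS = ("keylogger", "token grabber")


def prescreen(archive_bytes: bytes, description: str) -> str:
    """Return 'reject', 'priority_review', or 'standard_review'."""
    # 1. Exact match against previously identified malicious uploads.
    if hashlib.sha256(archive_bytes).hexdigest() in KNOWN_BAD_SHA256:
        return "reject"
    # 2. File integrity: the upload must be a readable ZIP with valid CRCs.
    try:
        with zipfile.ZipFile(io.BytesIO(archive_bytes)) as zf:
            if zf.testzip() is not None:  # name of the first corrupt member, if any
                return "reject"
            names = zf.namelist()
    except zipfile.BadZipFile:
        return "reject"
    # 3. Suspicious but not conclusive: send to the front of the human queue.
    if any(PurePosixPath(n).suffix.lower() in BLOCKED_EXTENSIONS for n in names):
        return "priority_review"
    text = description.lower()
    if any(term in text for term in FLAGGED_TERMS):
        return "priority_review"
    return "standard_review"
```

Executables are routed to priority review rather than rejected outright because some legitimate mods ship native libraries; whether to allow them at all is a policy decision for each platform.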

Dedicated Human Moderation Teams

While automation is powerful, human review remains indispensable. Trained moderation teams are essential for nuanced content evaluation, ensuring compliance with subjective content policies, and assessing overall mod quality. These teams should be familiar with the game, its lore, and the community’s culture. They can also provide valuable feedback to mod creators, fostering improvement.
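One way to connect the automated tier to the human team is a review queue whose order is set by pre-screening verdicts. The sketch below assumes two hypothetical verdict labels, `priority_review` and `standard_review`, and uses Python's `heapq` so flagged mods reach a moderator first while submissions within the same tier keep first-come, first-served order.

```python
import heapq
import itertools
from typing import List, Optional, Tuple

# Lower number = reviewed sooner. Labels are hypothetical pre-screening verdicts.
TIER_PRIORITY = {"priority_review": 0, "standard_review": 1}


class ReviewQueue:
    def __init__(self) -> None:
        self._heap: List[Tuple[int, int, str]] = []
        # Monotonic counter breaks ties so mods in the same tier stay in arrival order.
        self._arrival = itertools.count()

    def submit(self, mod_id: str, verdict: str) -> None:
        heapq.heappush(self._heap, (TIER_PRIORITY[verdict], next(self._arrival), mod_id))

    def next_for_review(self) -> Optional[str]:
        """Pop the mod a moderator should look at next, or None if the queue is empty."""
        if not self._heap:
            return None
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)
```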

Community Reporting and Peer Review

Empowering the community to report problematic mods is a powerful tool. Robust reporting systems, combined with clear guidelines on what constitutes a reportable offense, can significantly augment official moderation efforts. For certain platforms, a “peer review” system where trusted community members or established modders can vet submissions might also be considered, though this requires careful oversight to prevent abuse.
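A reporting system can be made harder to abuse with two simple rules: each account's report counts once per mod, and reports from trusted community members carry more weight. The sketch below applies both and, once a hypothetical threshold is crossed, only signals that the mod should be hidden pending human review, so a coordinated reporting campaign cannot remove a mod on its own. `HIDE_THRESHOLD` and the weights are illustrative assumptions.

```python
from collections import defaultdict
from typing import DefaultDict, Set

# Hypothetical tuning values; a real platform would calibrate them against its own report data.
HIDE_THRESHOLD = 5.0
TRUSTED_WEIGHT = 2.0
DEFAULT_WEIGHT = 1.0


class ReportTracker:
    def __init__(self, trusted_reporters: Set[str]) -> None:
        self.trusted = set(trusted_reporters)
        # mod_id -> accounts that have reported it; a set so repeat reports are ignored.
        self._reporters: DefaultDict[str, Set[str]] = defaultdict(set)

    def score(self, mod_id: str) -> float:
        return sum(
            TRUSTED_WEIGHT if r in self.trusted else DEFAULT_WEIGHT
            for r in self._reporters[mod_id]
        )

    def report(self, mod_id: str, reporter_id: str) -> bool:
        """Record a report; return True if the mod should be hidden pending human review."""
        self._reporters[mod_id].add(reporter_id)
        return self.score(mod_id) >= HIDE_THRESHOLD
```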

Ensuring User Safety: Beyond Content

Safety extends beyond content to the integrity of the user’s system and data.

Malware and Virus Protection

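Every mod file should undergo rigorous virus and malware scanning with up-to-date definitions before being made available for download. This is non-negotiable. Existing mods should also be re-scanned periodically: as new threats are identified and detection signatures are updated, a file that passed when it was uploaded may later be recognized as malicious.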
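Re-scanning can be scheduled mechanically by recording which signature-database version each mod was last scanned with. The sketch below assumes a hypothetical `ScanRecord` store, an integer signature version, and a 30-day maximum age; it selects mods scanned with outdated definitions or not scanned recently, oldest definitions first.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Iterable, List


@dataclass
class ScanRecord:
    mod_id: str
    signature_version: int   # version of the malware-signature database used last time
    scanned_at: datetime     # when that scan ran (one consistent timezone, e.g. UTC)


def mods_needing_rescan(
    records: Iterable[ScanRecord],
    current_signature_version: int,
    now: datetime,
    max_age: timedelta = timedelta(days=30),  # hypothetical upper bound between scans
) -> List[str]:
    due = [
        r for r in records
        if r.signature_version < current_signature_version or now - r.scanned_at > max_age
    ]
    # Mods checked against the oldest definitions go first, then the longest-unscanned.
    due.sort(key=lambda r: (r.signature_version, r.scanned_at))
    return [r.mod_id for r in due]
```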

Privacy and Data Security

Platforms hosting mods should ensure that mods cannot access or compromise users' personal data or system functionality beyond what is strictly necessary for their operation. Sandboxed execution environments or strict API limitations can help mitigate these risks.
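A capability check at the boundary of a game's modding API is one way to express "strictly necessary" in code. In the sketch below, a mod declares the capabilities it needs, and the host grants only the intersection of that request with a default-allowed set plus anything a reviewer explicitly approved. The `Capability` names and the `DEFAULT_ALLOWED` policy are hypothetical. An in-process check like this only limits what the modding API exposes; it is not a security boundary against native code, which needs process- or OS-level sandboxing.

```python
from enum import Enum, auto
from typing import FrozenSet, Iterable


class Capability(Enum):
    READ_GAME_STATE = auto()
    WRITE_SAVE_DATA = auto()
    NETWORK = auto()
    READ_FILESYSTEM = auto()


# Hypothetical policy: capabilities any mod may use without extra review.
DEFAULT_ALLOWED: FrozenSet[Capability] = frozenset(
    {Capability.READ_GAME_STATE, Capability.WRITE_SAVE_DATA}
)


class PermissionDenied(Exception):
    pass


class ModSandbox:
    def __init__(
        self,
        mod_id: str,
        requested: Iterable[Capability],
        approved_extra: FrozenSet[Capability] = frozenset(),
    ) -> None:
        self.mod_id = mod_id
        # Grant only what the mod asked for AND what policy or a reviewer allows.
        self.granted = frozenset(requested) & (DEFAULT_ALLOWED | approved_extra)

    def require(self, cap: Capability) -> None:
        """Called by every host API function before doing work on a mod's behalf."""
        if cap not in self.granted:
            raise PermissionDenied(f"{self.mod_id} was not granted {cap.name}")
```

Intersecting with the mod's own request means a mod never receives a capability it did not declare, which keeps the declared list an accurate description for reviewers and players.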

Transparency, Communication, and Feedback

A healthy modding ecosystem thrives on clear communication. Developers should be transparent about their moderation processes, explain decisions (especially rejections or removals), and provide avenues for modders to appeal decisions or seek clarification. Offering resources, tutorials, and direct support to mod creators can significantly improve the quality of submissions over time.
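Explaining decisions and supporting appeals is easier when every decision is stored as a structured record. The sketch below uses hypothetical field names, a made-up policy clause identifier format, and an assumed 14-day appeal window; it keeps the cited guideline and the message shown to the creator alongside the decision and derives whether an appeal is still open.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

APPEAL_WINDOW = timedelta(days=14)  # hypothetical; set by platform policy


@dataclass(frozen=True)
class ModerationDecision:
    mod_id: str
    action: str          # e.g. "approved", "rejected", "removed"
    policy_clause: str   # guideline the decision rests on (hypothetical IDs like "content/3.2")
    explanation: str     # message shown to the mod creator
    decided_at: datetime

    def appeal_deadline(self) -> Optional[datetime]:
        """Approvals need no appeal; everything else can be appealed within the window."""
        if self.action == "approved":
            return None
        return self.decided_at + APPEAL_WINDOW

    def can_appeal(self, now: datetime) -> bool:
        deadline = self.appeal_deadline()
        return deadline is not None and now <= deadline
```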

Long-Term Strategy: Adaptability and Evolution

The world of user-generated content is constantly evolving. Best practices for mod moderation are not static; they require continuous adaptation. Regularly reviewing policies, updating moderation tools, and staying engaged with both mod creators and the wider player base are crucial for maintaining a safe, high-quality, and thriving modding community for years to come.