What’s the best method for gaming reviews to consistently benchmark PC performance?

The Imperative of Consistent PC Performance Benchmarking

In the competitive landscape of PC gaming, discerning hardware performance is crucial for consumers making informed purchasing decisions. For reviewers, the challenge lies in providing data that is not only accurate but also consistently repeatable across different tests and hardware configurations. Inconsistent benchmarking can mislead readers, erode trust, and diminish the value of a review. Establishing a robust, standardized methodology is paramount to delivering credible and comparable results.

Foundation: Establishing a Standardized Test Environment

The cornerstone of consistent benchmarking is a meticulously controlled test environment. This begins with a dedicated test bench, ensuring that all components (CPU, GPU, RAM, storage, cooling) remain constant unless they are the specific item being reviewed. Factors like case airflow, ambient temperature, and power delivery can all subtly influence performance, making an open-air test bench or a highly controlled chassis ideal for minimizing variables.

Equally critical is software consistency. A clean, freshly installed operating system (Windows 10/11) with minimal background processes is essential. All drivers (chipset, GPU, audio) must be at specific, noted versions, and auto-updates should be disabled during testing. This prevents performance fluctuations due to unforeseen driver changes or background system tasks, ensuring that observed differences truly stem from the hardware or game under scrutiny.
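As a minimal sketch of how the noted versions could be recorded alongside each test session, the Python snippet below builds a small manifest and serializes it to JSON. The function name `build_manifest`, the field names, and the placeholder version strings are all hypothetical. The driver and game versions are entered by hand, since this sketch does not attempt to query them from the system.

```python
import json
import platform
from datetime import datetime, timezone


def build_manifest(gpu_driver, chipset_driver, game_version, settings, ambient_c):
    """Collect the software state of one test session into a single record.

    Only the OS fields are detected automatically (via the standard
    `platform` module). Everything else is supplied by the reviewer.
    """
    return {
        "captured_at": datetime.now(timezone.utc).isoformat(),
        "os": platform.system(),
        "os_release": platform.release(),
        "os_build": platform.version(),
        "gpu_driver": gpu_driver,
        "chipset_driver": chipset_driver,
        "game_version": game_version,
        "settings": settings,
        "ambient_c": ambient_c,
    }


if __name__ == "__main__":
    # Placeholder values for illustration only, not real version numbers.
    manifest = build_manifest(
        gpu_driver="EXAMPLE-GPU-1.0",
        chipset_driver="EXAMPLE-CHIPSET-1.0",
        game_version="EXAMPLE-PATCH-1.0",
        settings={"resolution": "2560x1440", "preset": "High"},
        ambient_c=22.5,
    )
    print(json.dumps(manifest, indent=2))
```

Storing one such record next to every set of results makes it easier to spot later whether two runs were actually captured under the same software conditions.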

Mastering In-Game Performance Capture

Once the environment is stable, the focus shifts to capturing reliable in-game performance data. This involves selecting specific, reproducible test sequences within games that are representative of actual gameplay and sufficiently demanding. Many games include built-in benchmarks, which are excellent for consistency, provided they accurately reflect real-world scenarios. For games without built-in tools, reviewers must define and execute precise, repeatable manual walkthroughs.

The choice of monitoring tools is also vital. While some GPU drivers offer overlays, dedicated third-party tools like OCAT, CapFrameX, or PresentMon provide more granular and accurate frame time data. These tools allow for precise logging of performance metrics over a defined period, capturing not just average frame rates but also critical data points like 1% and 0.1% low FPS, which better indicate stuttering and overall smoothness.
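To illustrate how a logged capture might be read back for analysis, here is a small Python sketch that pulls per-frame times out of a CSV log. It assumes the frame time column is named `MsBetweenPresents`, as in PresentMon 1.x style captures. Other tools and newer versions may use different column names, so check the header of your own logs and pass the right name. The helper name `load_frame_times` is hypothetical.

```python
import csv
import io
import math


def load_frame_times(csv_file, column="MsBetweenPresents"):
    """Return the frame times (in milliseconds) found in one CSV capture.

    `column` is an assumption about the log format; adjust it to match
    the header your capture tool actually writes.
    """
    reader = csv.DictReader(csv_file)
    if not reader.fieldnames or column not in reader.fieldnames:
        raise ValueError(f"column {column!r} not found in capture header")

    frame_times = []
    for row in reader:
        raw = (row.get(column) or "").strip()
        try:
            value = float(raw)
        except ValueError:
            continue  # skip blank or non-numeric cells
        if math.isfinite(value) and value > 0:
            frame_times.append(value)
    return frame_times


if __name__ == "__main__":
    # Tiny in-memory sample standing in for a real capture file.
    sample = io.StringIO(
        "Application,MsBetweenPresents\n"
        "game.exe,16.6\n"
        "game.exe,NA\n"
        "game.exe,33.4\n"
    )
    print(load_frame_times(sample))
```

With a real log, the same function can be called on a file opened with `open(path, newline="")`.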

Key Metrics for Comprehensive Analysis

Raw average frames per second (FPS) is a primary metric, but it tells only part of the story. A comprehensive review delves deeper into the performance data:

- Average FPS: Provides a general indication of performance.

- 1% Lows: The average FPS across the slowest 1% of frames (those with the longest frame times), indicating minor stutters or dips.

- 0.1% Lows: The average FPS across the slowest 0.1% of frames, highlighting more significant, noticeable stutters.

- Frame Times: The time taken to render each individual frame, measured in milliseconds. Consistently low frame times produce a smoother experience, and a high average FPS can still hide uneven frame pacing. Large spikes in frame time show up on screen as stutter.

Analyzing these metrics together provides a holistic view of performance, differentiating between systems that merely achieve high average FPS and those that deliver genuinely fluid gameplay.
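The metrics above can be sketched in a few lines of Python, starting from a list of per-frame times in milliseconds. This is one reasonable interpretation, not a standard: it treats the 1% and 0.1% lows as the average FPS over the slowest 1% and 0.1% of frames. Capture tools do not all compute lows the same way (some report a percentile instead), so numbers from this sketch may not match a given tool exactly. The function name `summarize` and the result keys are hypothetical.

```python
def summarize(frame_times_ms):
    """Compute average FPS and low-percentile FPS from frame times in ms."""
    if not frame_times_ms:
        raise ValueError("no frame times supplied")

    n = len(frame_times_ms)
    # Average FPS is total frames divided by total elapsed time.
    avg_fps = 1000.0 * n / sum(frame_times_ms)

    # Longest frame times first, so the slowest frames lead the list.
    slowest_first = sorted(frame_times_ms, reverse=True)

    def low_fps(divisor):
        # divisor=100 selects the slowest 1%, divisor=1000 the slowest 0.1%.
        # At least one frame is always used, even for short captures.
        count = max(1, n // divisor)
        return 1000.0 * count / sum(slowest_first[:count])

    return {
        "avg_fps": avg_fps,
        "low_1pct_fps": low_fps(100),
        "low_0_1pct_fps": low_fps(1000),
        "max_frame_time_ms": slowest_first[0],
    }


if __name__ == "__main__":
    # 99 smooth frames at 10 ms plus a single 50 ms spike.
    stats = summarize([10.0] * 99 + [50.0])
    for name, value in stats.items():
        print(f"{name}: {value:.2f}")
```

In this example the average stays near 96 FPS while the 1% low drops to 20 FPS, which is the kind of gap that an average alone would hide.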

Ensuring Repeatability and Accuracy

To further bolster consistency, several practices should be rigorously followed:

- Multiple Test Runs: Perform at least three runs, and ideally five, for each benchmark sequence and average the results. This reduces the influence of outliers and shows how much the results vary from run to run.

- Document Everything: Maintain detailed logs of every test parameter, including OS version, game version and patches, driver versions, specific in-game settings (resolution, texture quality, anti-aliasing, etc.), and even ambient room temperature.

- Warm-Up Periods: Allow the system to warm up under load for a short period before commencing benchmarks to ensure components have reached their typical operating temperatures.

- Thermal Monitoring: Continuously monitor CPU and GPU temperatures to identify any thermal throttling that might skew results.

These diligent practices minimize external variables and maximize the reliability of the collected data.
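The multiple-run practice above can be sketched as a small Python helper that averages one metric across runs and reports how far the runs spread. The 3% threshold for flagging a noisy set of runs is an arbitrary assumption chosen for illustration, not an established standard, and the name `aggregate_runs` is hypothetical.

```python
from statistics import mean, stdev


def aggregate_runs(run_values, max_cv_percent=3.0):
    """Average one metric (for example average FPS) across repeated runs.

    `max_cv_percent` is an assumed tolerance for run-to-run variation;
    pick a value that suits your own test bench.
    """
    if len(run_values) < 3:
        raise ValueError("at least three runs are required")

    avg = mean(run_values)
    if avg <= 0:
        raise ValueError("run values must average above zero")

    spread = stdev(run_values)  # sample standard deviation
    cv_percent = 100.0 * spread / avg  # coefficient of variation

    return {
        "mean": avg,
        "stdev": spread,
        "cv_percent": cv_percent,
        "needs_rerun": cv_percent > max_cv_percent,
    }


if __name__ == "__main__":
    print(aggregate_runs([100.0, 102.0, 98.0]))
    print(aggregate_runs([100.0, 120.0, 80.0]))
```

Reporting the spread alongside the mean lets readers judge whether a small gap between two products is larger than the noise in the measurement.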

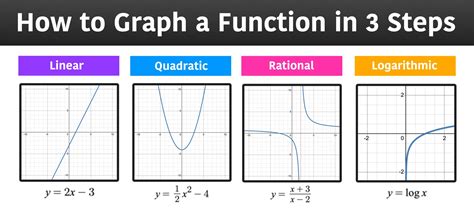

Presenting Benchmarking Data Effectively

The final step is to present the complex data in an easily digestible and insightful manner. Clear, well-labeled graphs and charts are essential for comparing performance across different hardware configurations or game settings. Beyond raw numbers, reviewers should contextualize the data, explaining what average FPS, 1% lows, and frame times mean for the actual gaming experience. Discussing perceived fluidity, responsiveness, and any noticeable stutters helps bridge the gap between numerical data and real-world user experience.

The Evolving Art of Performance Reviewing

Consistent PC performance benchmarking is both a science and an art. While the science dictates rigorous methodology and data collection, the art lies in interpreting these numbers and translating them into meaningful insights for the reader. As games and hardware continually evolve, so too must benchmarking methodologies. Reviewers must remain adaptable, continuously refining their processes to ensure their data remains relevant, accurate, and consistently serves the needs of the gaming community.