How to Effectively Moderate Toxic Gaming Community Comments and Promote Positive Engagement

The Challenge of Online Toxicity in Gaming Communities

Gaming communities, vibrant hubs of shared passion, can unfortunately also become breeding grounds for toxicity. From hateful slurs and harassment to incessant trolling and griefing, negative interactions can quickly drive away players, tarnish a game’s reputation, and undermine the very essence of communal play. Effectively moderating these environments is not just about enforcing rules; it’s about cultivating a space where players feel safe, respected, and eager to engage positively.

The goal is a delicate balance: quashing negativity without stifling genuine discussion or player expression. Achieving this requires a multi-faceted approach, combining clear guidelines, robust tools, and a proactive strategy for fostering positive interactions.

Establishing Clear, Visible, and Enforceable Guidelines

The foundation of any healthy community is a well-defined set of rules. These guidelines must be clear, concise, and easily accessible, and they must explicitly outline what constitutes acceptable and unacceptable behavior. Ambiguity breeds confusion and inconsistency, which makes moderation harder and more likely to be perceived as unfair.

- Specificity: Instead of vague terms like “be nice,” define what “nice” means in context (e.g., “No personal attacks or hate speech”).

- Visibility: Rules should be prominently displayed in-game, on forums, and on any other community platforms.

- Consequences: Clearly state the progressive disciplinary actions for rule violations, from warnings to temporary bans and permanent exclusions.

- Community Input: Periodically review and update rules, sometimes even soliciting feedback from the community to ensure they remain relevant and fair.
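The progressive disciplinary ladder described in the Consequences item can be modeled as an ordered list of sanctions indexed by a player's strike count. The Python below is a minimal sketch under stated assumptions: the step names in `LADDER`, the `PlayerRecord` type, and the `apply_violation` helper are all hypothetical, and each community would choose its own steps and durations.

```python
from dataclasses import dataclass, field

# Hypothetical escalation ladder, mildest sanction first.
LADDER = ["warning", "24h_mute", "7d_ban", "permanent_ban"]

@dataclass
class PlayerRecord:
    player_id: str
    strikes: int = 0                                   # confirmed violations so far
    history: list[str] = field(default_factory=list)   # sanctions applied, in order

def apply_violation(record: PlayerRecord) -> str:
    """Record one confirmed violation and return the sanction it triggers."""
    # Cap the index so repeat offenders stay at the final step.
    step = min(record.strikes, len(LADDER) - 1)
    sanction = LADDER[step]
    record.strikes += 1
    record.history.append(sanction)
    return sanction
```

Because the index is capped at the last step, a player who keeps offending simply stays at the permanent ban. A community might also let old strikes expire after a period of good behavior, which would mean decrementing `strikes` over time; that policy is left out of this sketch.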

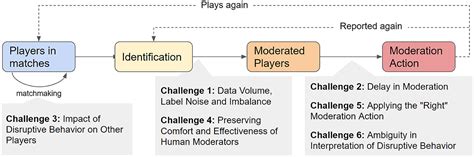

Leveraging Robust Moderation Tools and Trained Teams

Modern toxicity often requires modern solutions. At scale, manual moderation alone quickly becomes overwhelming, which makes technology a crucial ally.

Automated and AI-Powered Tools

Automated keyword filtering, combined with AI-driven sentiment analysis and pattern recognition, can proactively flag or automatically remove problematic content before a human moderator ever sees it. These tools can handle the bulk of obvious violations, freeing human moderators for more nuanced cases.
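The division of labor described above, where automation handles obvious violations and humans handle nuanced ones, can be sketched as a three-way triage. This minimal Python example uses plain keyword matching only. It assumes two hypothetical term lists (`BLOCK_TERMS` for unambiguous violations and `WATCH_TERMS` for context-dependent words) and does not implement sentiment analysis or any machine-learning model, which would typically replace or supplement the watch list.

```python
import re
from enum import Enum

class Action(Enum):
    ALLOW = "allow"
    FLAG_FOR_REVIEW = "flag_for_review"  # queued for a human moderator
    AUTO_REMOVE = "auto_remove"          # removed before anyone sees it

# Hypothetical placeholder lists; a real deployment would load curated,
# per-language lists maintained by the moderation team.
BLOCK_TERMS = {"banned_word_1", "banned_word_2"}  # unambiguous violations
WATCH_TERMS = {"trash", "uninstall", "noob"}      # meaning depends on context

def tokens(text: str) -> set[str]:
    """Lowercase the text and split it into word tokens ("NOOB!!" -> "noob")."""
    return set(re.findall(r"\w+", text.lower()))

def triage(comment: str) -> Action:
    """Route a comment: auto-remove, send to a human, or allow."""
    words = tokens(comment)
    if words & BLOCK_TERMS:
        return Action.AUTO_REMOVE
    if words & WATCH_TERMS:
        return Action.FLAG_FOR_REVIEW
    return Action.ALLOW
```

Exact token matching is easy to evade with spellings such as “n00b” or inserted spaces, which is one reason keyword filters are paired with pattern recognition and human review rather than used alone.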

Dedicated and Well-Trained Human Moderators

While AI is powerful, human judgment is irreplaceable. A team of trained moderators is essential for understanding context, applying discretion, and engaging directly with the community. Training should cover not just the rules, but also de-escalation techniques, cultural sensitivities, and consistent application of policies.

Strategies for Promoting Positive Engagement

Moderation isn’t just about punishment; it’s equally about nurturing positive interactions. A healthy community actively promotes good behavior.

Highlighting and Rewarding Positive Contributions

Acknowledge and celebrate players who contribute positively. This could involve in-game titles, forum badges, shout-outs, or even direct recognition from developers. Publicly rewarding good behavior encourages others to emulate it.
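Some of this recognition can be automated with explicit criteria. As one illustration, the Python sketch below decides whether a player earns a hypothetical “Helper” forum badge. It assumes the platform tracks helpful votes and the date of a player's most recent sanction; `ContributorStats`, `earns_helper_badge`, and the `HELPFUL_THRESHOLD` and `CLEAN_PERIOD` values are placeholders, not recommendations.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional

HELPFUL_THRESHOLD = 25             # hypothetical: helpful votes needed for the badge
CLEAN_PERIOD = timedelta(days=30)  # hypothetical: sanction-free window required

@dataclass
class ContributorStats:
    player_id: str
    helpful_votes: int
    last_violation: Optional[date]  # None if the player has never been sanctioned

def earns_helper_badge(stats: ContributorStats, today: date) -> bool:
    """True if the player is consistently helpful and has a clean recent record."""
    if stats.helpful_votes < HELPFUL_THRESHOLD:
        return False
    if stats.last_violation is None:
        return True
    return today - stats.last_violation > CLEAN_PERIOD
```

Requiring a clean recent record keeps the badge from rewarding a player who is helpful in one thread and abusive in another.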

Organizing Community Events and Initiatives

Host regular events, contests, and collaborative challenges that encourage teamwork, friendly competition, and positive interaction. These activities provide structured opportunities for players to engage constructively and build camaraderie.

Empowering Community Leaders and Mentors

Identify and empower positive, influential members of your community. These individuals can serve as peer mentors, forum guides, or even junior moderators, setting a positive example and helping to steer discussions in constructive directions.

Creating Dedicated Channels for Feedback and Discussion

Provide safe, monitored spaces for players to voice concerns, offer feedback, and engage in constructive discussions. This shows players that their opinions are valued and helps to prevent frustrations from boiling over into toxic outbursts in public channels.

Consistency, Transparency, and Adaptability

For moderation to be effective and trusted, it must be consistent. Players need to know that the rules apply equally to everyone.

While complete transparency on every moderation action might not be feasible, communicating general trends, policy updates, and the rationale behind moderation decisions can build trust.

Finally, community dynamics evolve. Regularly review your moderation strategies, analyze which types of toxicity are most prevalent, and adapt your tools and approaches accordingly. What worked last year might not work today.
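Analyzing which types of toxicity are prevalent can start with something as simple as counting confirmed reports per category per month. The Python sketch below assumes each report is available as a `(date, category)` pair; the category labels and both helper functions, `category_trends` and `change_between`, are hypothetical.

```python
from collections import Counter, defaultdict
from datetime import date

Month = tuple[int, int]  # (year, month)

def category_trends(reports: list[tuple[date, str]]) -> dict[Month, Counter]:
    """Count confirmed reports per calendar month and category."""
    by_month = defaultdict(Counter)
    for day, category in reports:
        by_month[(day.year, day.month)][category] += 1
    return dict(by_month)

def change_between(trends: dict[Month, Counter], earlier: Month, later: Month) -> dict[str, int]:
    """Per-category change in report counts from `earlier` to `later`."""
    before = trends.get(earlier, Counter())
    after = trends.get(later, Counter())
    # Counter returns 0 for missing keys, so categories present in only one month still work.
    return {c: after[c] - before[c] for c in set(before) | set(after)}
```

A positive value from `change_between` marks a category that grew between the two months, which is a signal to revisit the rules, filters, or moderator training aimed at it.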

Conclusion: Building a Thriving Digital Ecosystem

Effectively moderating toxic gaming community comments and promoting positive engagement is an ongoing commitment. It requires a blend of technology, human empathy, clear communication, and a proactive approach to community building. By investing in these areas, game developers and community managers can transform potentially hostile environments into vibrant, welcoming spaces where players can truly connect, collaborate, and enjoy their shared passion for gaming.